Deployment pipeline: let's do it in the cloud!

How can automation, the public cloud, and AWS and Azure tools help us build a deployment pipeline?

Kamil Kovář

I could start with the old refrain about how constant change pushes the business to keep developing, which in turn forces us to release software constantly. I don't deny that. But we also want to automate the entire process, from committing code to releasing it to production, for two other reasons that hit closer to home. First, deploying repeatedly by hand is tedious work with plenty of room for human error. Second, the amount of software is growing exponentially with digitization and the decomposition of applications into microservices. We therefore have no choice but to automate all non-creative development activities. How can the public cloud help us build a deployment pipeline?

In software development, a deployment pipeline is a system that automatically takes new versioned code from the repository to non-production and production environments.

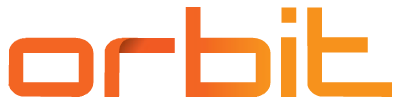

The basic steps from writing new code to running it are usually referred to by the broad term build (although in some areas the meaning is narrowed considerably, for example to just building an image). There are basically three of them: compiling, testing and deployment.

In case software development is not your daily bread, let's go over what each step involves.

Compiling

The developer writes the program code in a development tool (such as Eclipse or Visual Studio), which helps them build the code logic quickly and without errors. The code is saved in repositories (e.g. GitHub, Bitbucket), where it is versioned, merged with the work of other developers, stored in the appropriate development branch, linked to the requested change, etc.

The code describes what the software should do in the language of your choice, but it has to be translated into instructions that the processor can execute. That is why the code needs to be compiled. This has been an automated process for decades: a module of the development tool called a compiler translates the code and creates a package of executables, libraries, data structures, etc. The package is stored in a repository as another artifact.

This stage also includes building a container image, which bundles the compiled package with the required software structure and the components of the operating system the image is intended for. The image is stored in a registry (Docker Hub, JFrog Artifactory, Amazon ECR, Azure Container Registry, etc.).
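To make the compile-and-package step concrete, here is a minimal Python sketch. It assumes a Python code base, so the "compiler" is the standard library's `py_compile`, which produces bytecode rather than native processor instructions; the function name `build_package` and the `app-<version>.zip` naming are hypothetical. A real build would call the toolchain of your own language.

```python
import py_compile
import zipfile
from pathlib import Path


def build_package(src_dir: str, out_dir: str, version: str) -> Path:
    """Compile every .py file under src_dir and bundle the bytecode into a
    versioned zip archive, i.e. the "package" artifact of the build.
    The function name and archive naming scheme are hypothetical."""
    src = Path(src_dir)
    out = Path(out_dir)
    build_dir = out / "build"
    out.mkdir(parents=True, exist_ok=True)
    artifact = out / f"app-{version}.zip"

    with zipfile.ZipFile(artifact, "w", compression=zipfile.ZIP_DEFLATED) as zf:
        for source in sorted(src.rglob("*.py")):
            rel = source.relative_to(src).with_suffix(".pyc")
            compiled = build_dir / rel
            compiled.parent.mkdir(parents=True, exist_ok=True)
            # doraise=True raises py_compile.PyCompileError on a syntax error,
            # so a broken commit fails the build instead of being packaged.
            py_compile.compile(str(source), cfile=str(compiled), doraise=True)
            zf.write(compiled, arcname=rel.as_posix())
    return artifact
```

In an automated pipeline, a step like this would run on every commit, and the resulting archive would then be uploaded to an artifact repository.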

Testing

Software can be put through a large number of tests at different stages of development, some automated, some semi-automated and some manual. The more frequent the releases I need, the more automated tests I need, and someone has to prepare them laboriously. Selenium is one well-known tool for automated testing.

Before compiling, the code can be checked for quality and security with tools such as SonarQube, which performs continuous code inspection. After a package or container is created, a comprehensive vulnerability scan can be run on it, for example with the Qualys scanner integrated into Azure Security Center, which scans containers stored in Azure Container Registry.

Unit tests automatically check each module of the program, integration tests check that the program can pass data between its components, and functional tests verify that the software works as specified.

Next, after deployment to a given environment (stage), there are end-to-end tests from the perspective of the user and the whole environment, acceptance tests that formally approve the move to production, performance tests that verify the application's speed in the given environment, and penetration tests that reveal whether the application environment is vulnerable to attack.
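Of the test types above, unit tests are the simplest to illustrate. The sketch below uses Python's built-in `unittest` module; `apply_discount` is a hypothetical module under test, not code from any real product.

```python
import unittest


def apply_discount(price: float, percent: float) -> float:
    """Hypothetical module under test: reduce a price by a percentage."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


class ApplyDiscountTest(unittest.TestCase):
    """Each test checks one behaviour of the module in isolation."""

    def test_regular_discount(self):
        self.assertEqual(apply_discount(200.0, 25), 150.0)

    def test_zero_discount_keeps_price(self):
        self.assertEqual(apply_discount(99.99, 0), 99.99)

    def test_invalid_percent_is_rejected(self):
        with self.assertRaises(ValueError):
            apply_discount(100.0, 120)
```

Saved to a file (say, the hypothetical `test_discount.py`), the suite runs with `python -m unittest test_discount.py`; a CI build runs such suites automatically and stops the pipeline when any test fails.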

Deployment

The application environment consists of multiple components: virtual servers, containers, frameworks, databases and network elements. There are typically several environments, at least a test one and a production one, but robust applications may have, for example, a development environment, two test environments, a training environment, a pre-production environment and production.
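The chain of environments can be modelled as an ordered list through which each build is promoted. This is a sketch under assumptions: the environment names, their order (as listed above) and the rule that a build must pass through every earlier stage before entering the next are illustrative, not taken from any particular tool.

```python
from typing import Optional, Sequence, Set

# Hypothetical environment chain, in the order listed in the text above.
ENVIRONMENTS = ("development", "test-1", "test-2", "training", "pre-production", "production")


def next_environment(current: str, chain: Sequence[str] = ENVIRONMENTS) -> Optional[str]:
    """Return the environment a build is promoted to after `current`,
    or None when it is already in the last one."""
    position = list(chain).index(current)  # raises ValueError for unknown names
    return chain[position + 1] if position + 1 < len(chain) else None


def can_promote(deployed_to: Set[str], target: str, chain: Sequence[str] = ENVIRONMENTS) -> bool:
    """A build may enter `target` only after it has been deployed to
    every environment that precedes it in the chain."""
    earlier = chain[: list(chain).index(target)]
    return all(env in deployed_to for env in earlier)
```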

Deployment means placing the compiled software package in the target location in the filesystem, applying the required configuration to the environment and, if necessary, integrating it with surrounding systems. This can be done manually on individual machines with prepared scripts that we run by hand, or through automation that performs the whole deployment in a controlled way from a central control point. The best-known tools include Jenkins and TeamCity.
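The first two deployment actions named above, placing the package in its target location and applying environment-specific configuration, can be sketched in a few lines of Python. The settings, URLs and the `deploy` function are hypothetical, integration with surrounding systems is left out, and a central tool such as Jenkins or TeamCity could run an equivalent script as one of its steps.

```python
import json
import shutil
from pathlib import Path

# Hypothetical settings: shared defaults plus per-environment overrides.
BASE_CONFIG = {"log_level": "INFO", "db_pool_size": 5}
OVERRIDES = {
    "test": {"log_level": "DEBUG", "db_url": "postgres://test-db/app"},
    "production": {"db_pool_size": 20, "db_url": "postgres://prod-db/app"},
}


def deploy(package: str, target_dir: str, environment: str) -> dict:
    """Place the package in the target directory and write the merged
    configuration for the environment next to it."""
    if environment not in OVERRIDES:
        raise ValueError(f"unknown environment: {environment}")
    # Later keys win, so environment overrides replace the defaults.
    config = {**BASE_CONFIG, **OVERRIDES[environment]}
    target = Path(target_dir)
    target.mkdir(parents=True, exist_ok=True)
    shutil.copy2(package, target / Path(package).name)
    (target / "config.json").write_text(json.dumps(config, indent=2))
    return config
```

Rejecting unknown environment names, instead of silently falling back to the defaults, keeps a typo from deploying production code with test settings.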

Automatic deployment pipeline

When we talk about an automatic deployment pipeline, we mean automatically performing all three of the above steps (and usually much more). As mentioned, we need tools that allow us to do this automatically:

- Source code control

- Compiling code and preparing images

- Testing the release

- Configuring the environment

- Monitoring the entire process
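The core idea of such a pipeline, running the stages in a fixed order, logging each one and stopping at the first failure, fits into a small Python sketch. The stage names and the `run_pipeline` helper are hypothetical; real CI/CD servers add parallelism, artifact handling, retries and much more.

```python
from typing import Callable, List, Tuple

# A stage is a name plus a function that returns True on success.
Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage]) -> Tuple[bool, List[str]]:
    """Run stages in order, stop at the first failure and report whether
    the whole pipeline succeeded and which stages completed."""
    completed: List[str] = []
    for name, step in stages:
        print(f"[pipeline] running: {name}")  # stand-in for real monitoring
        if not step():
            print(f"[pipeline] failed: {name}, stopping")
            return False, completed
        completed.append(name)
    return True, completed
```

For example, if a "unit tests" stage returns False, any deployment stage listed after it is never executed, and the function reports failure together with the stages that did complete.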

The aim is:

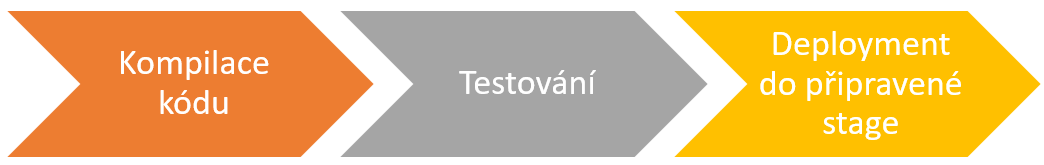

- continuous integration - the ability to integrate code changes from multiple developers into a common package; not to be confused with the need to maintain the ability to continuously integrate with other applications during continuous deployment;

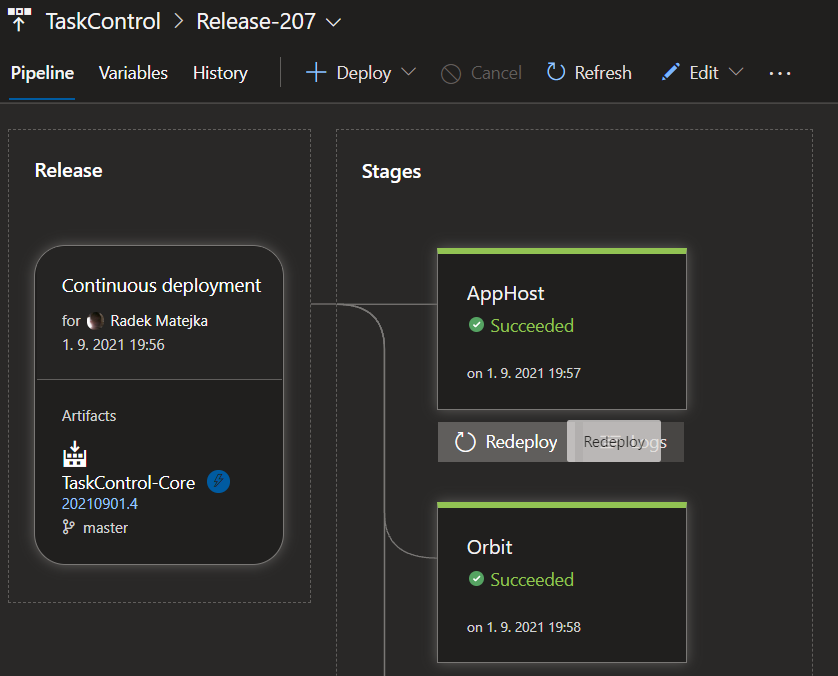

- continuous delivery - after making a change to the code and after manual approval, I am able to automatically deploy different versions of the code to different environments, including production; deployment is started manually at the appropriate time within the release management processes;

- alternatively, continuous deployment - fully automated deployment covering everything from code checks and unit tests to the actual deployment and any further functional and non-functional tests of the deployed software in the environment ("one-click").
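In practice, the difference between continuous delivery and continuous deployment comes down to whether a manual approval gate sits in front of a deployment. A minimal Python sketch, assuming for simplicity that only production is gated; which environments need the manual step is an assumption here, not something the definitions above fix.

```python
from enum import Enum
from typing import FrozenSet


class ReleaseMode(Enum):
    CONTINUOUS_DELIVERY = "continuous delivery"
    CONTINUOUS_DEPLOYMENT = "continuous deployment"


def may_deploy(
    environment: str,
    mode: ReleaseMode,
    approved: bool,
    gated: FrozenSet[str] = frozenset({"production"}),  # assumption: only prod is gated
) -> bool:
    """Decide whether a build that passed all its tests may be deployed.

    Continuous delivery: gated environments wait for a manual approval.
    Continuous deployment: every passing build is deployed automatically.
    """
    if mode is ReleaseMode.CONTINUOUS_DEPLOYMENT or environment not in gated:
        return True
    return approved
```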

Maybe you're allergic to all these "continuous" terms, and I wouldn't be surprised. The same goes for the word agile... it feels like someone keeps saying "...blah, blah, blah...". But it will appear only once in this text. Many companies live off trends and hype, inventing and recycling fashionable concepts. That doesn't change the fact that with the right tools and procedures, teamwork on software is more efficient.

Situation A: We already have a pipeline

Many companies already have a pipeline for new projects that is at least partially built. It might typically look like this:

The most underrated or bypassed parts are usually testing and security, often simply because of cost and time constraints in development.

Deploying even such a simple pipeline means development and operations teams working together, with security and, of course, business involved. It requires expertise, experience and often weeks of work. In large environments, an entire team of specialists is dedicated to the operation and development of deployment pipelines.

What will it look like in the public cloud?

If the company already has experience with automation, the move to cloud services (which we help customers with at ORBIT) usually takes one of two paths:

The path of least resistance

If a company is starting to use cloud resources, already has a working pipeline, and sees the cloud as another flexible data center from which it draws IaaS, PaaS and container resources, it can perfectly well keep using its existing setup. Common tools such as the aforementioned Jenkins include plug-ins for both Azure and AWS resources, so the public cloud can serve as an additional platform for further stages.

Native tools path

If the public cloud is to become the company's main or only platform (most workloads are being migrated from on-premises), it pays to use the cloud providers' own tools. Microsoft and Amazon take different approaches here.

Microsoft has long been building its own development and CI/CD tools, which evolved from Team Foundation Server into Azure DevOps, a product that can be deployed and used both in the cloud and on-premises.

Unlike Azure DevOps, the AWS DevOps tools are separate services, and you can use only the ones you need.

Let's briefly introduce what both providers offer. We won't compare them, but note that both cloud platforms provide tools that can manage the cloud resources of all major public providers, which makes deployment to a multi-cloud environment possible. (You can read more about DevOps in this article.)

Microsoft Azure DevOps

Azure DevOps exists as Server (for on-premises installation) and as Services (a service in Microsoft Azure, with a "self-hosted" option outside of SaaS). It grew out of the Team Foundation Server and Visual Studio Team System products associated with the MS Visual Studio IDE. The current cloud product is tailored to work with MS Visual Studio and Eclipse, uses Git as its main repository, and includes tools for the entire DevOps cycle.

It consists of five modules:

Azure Boards

- for planning development work in Kanban style

Azure Pipelines

- for building, testing and deployment in conjunction with GitHub

- supports Node.js, Python, Java, PHP, Ruby, C/C++ and of course .NET

- works with Docker Hub and Azure Container Registry and can deploy to Kubernetes (K8S)

Azure Repos

- hosted Git repositories

Azure Test Plans

- support for testing linked to user stories from Azure Boards

Azure Artifacts

- a tool for sharing artifacts and packages in teams

Azure DevOps tools are connected to each other in a single ecosystem, and you can start using any module.

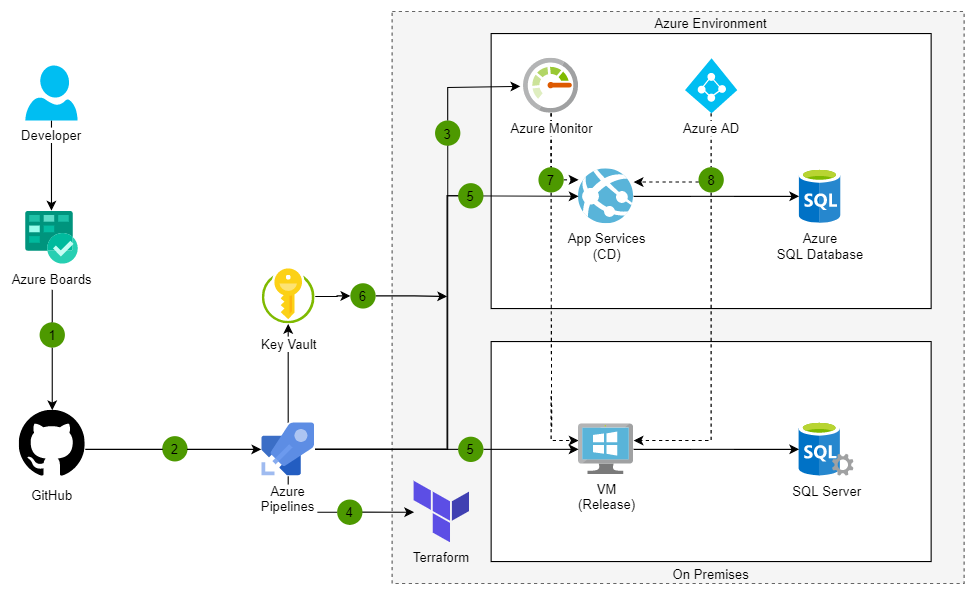

The result could be, for example, this simple architecture for hybrid web application deployment using Terraform:

Depending on the number of users and parallel pipelines, the tools can be used to a limited extent for free (up to five users, up to 2 GB in Artifacts), in the Basic plan (without Test Plans) for five dollars per user per month, or in the plan that includes Test Plans (almost ten times more expensive, but testing is testing).

I was recently intrigued by a talk on using Azure DevOps not only for developing software for internal and external customers, but also for IT staff who write scripts and therefore need to maintain, version and run them. In that sense, every IT admin who scripts is a developer who can make good use of Azure DevOps. Good idea.

AWS continuous deployment tools

Amazon provides a number of its own tools and integrated third-party tools from which a complete automatic deployment pipeline can be assembled.

AWS CodeArtifact

- secure storage of artifacts

- priced by the volume of data stored

AWS CodeBuild

- a service that compiles source code, performs tests and builds software packages

- priced by build time

Amazon CodeGuru

- a machine learning service that finds bugs and vulnerabilities directly in the code (supports Java and Python)

- priced by the number of lines of code

AWS CodeCommit

- a space for sharing source code, based on Git

- up to five users for free

AWS CodeDeploy

- automates deployment to EC2, Fargate (serverless containers) and Lambda, plus management of on-premises servers

- no charge except for deployments to on-premises servers; you pay for the resources used

AWS CodePipeline

- visualization and automation of the stages of the software release process

- costs about a dollar per active pipeline per month

For deployments that include infrastructure as code, AWS CloudFormation can be used. Individual steps can be triggered by AWS Lambda. The container ecosystem includes the Amazon ECR registry and the Amazon EKS Kubernetes service. Another useful feature is Amazon ECR image scanning, which identifies vulnerabilities in container images.
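As an example of a DevSecOps gate, a pipeline step can block an image whose ECR scan reports serious findings. The sketch below only evaluates a dictionary; it assumes the dictionary has the shape of the response returned by boto3's `ecr.describe_image_scan_findings(...)` (the `imageScanStatus` and `imageScanFindings.findingSeverityCounts` fields), and the blocking thresholds are hypothetical policy choices. Fetching the response itself (credentials, repository name, image tag) is left out.

```python
from typing import Iterable, Mapping

# Hypothetical policy: block the release on any HIGH or CRITICAL finding.
BLOCKING_SEVERITIES = ("CRITICAL", "HIGH")


def image_passes_scan(
    response: Mapping,
    blocking: Iterable[str] = BLOCKING_SEVERITIES,
    max_allowed: int = 0,
) -> bool:
    """Return True if the scan finished and the number of findings with a
    blocking severity does not exceed max_allowed. Fails closed: an
    unfinished or failed scan never lets the image through."""
    status = response.get("imageScanStatus", {}).get("status")
    if status != "COMPLETE":
        return False
    counts = response.get("imageScanFindings", {}).get("findingSeverityCounts", {})
    blocking_total = sum(counts.get(severity, 0) for severity in blocking)
    return blocking_total <= max_allowed
```

Failing closed is a deliberate choice: a scan that is still running should hold the release rather than wave it through.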

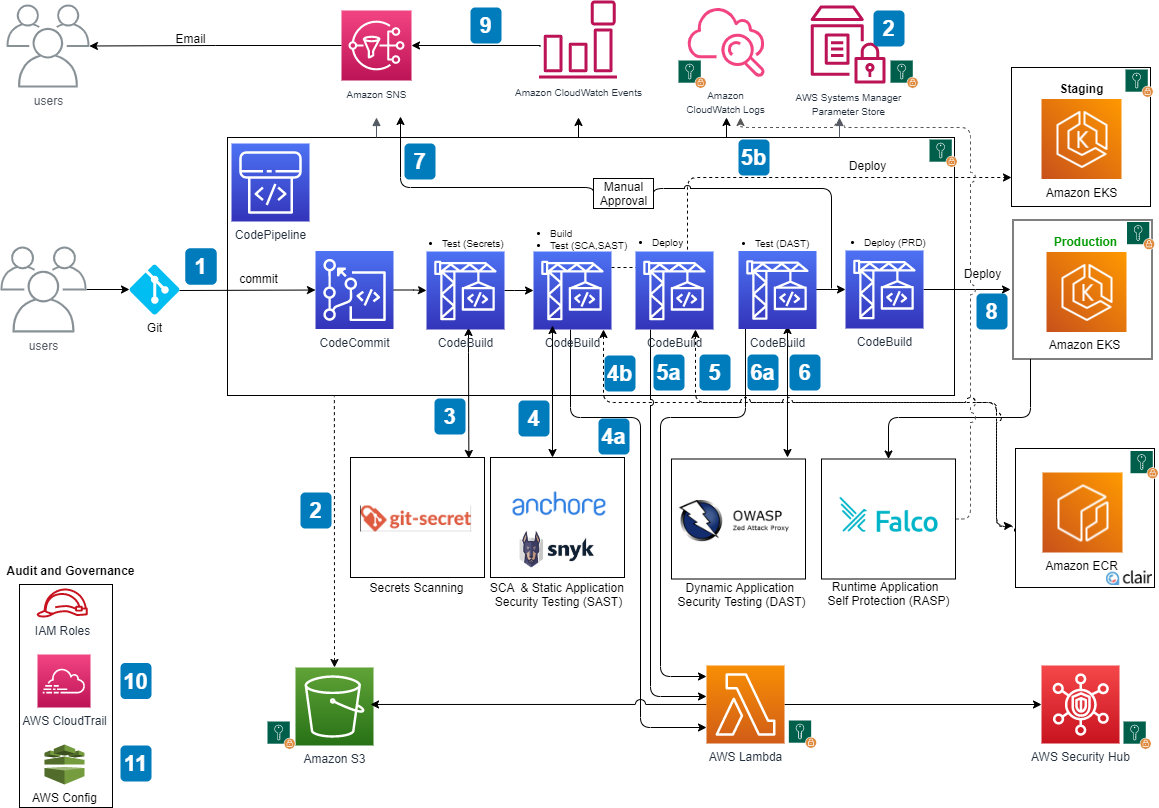

For example, an AWS DevSecOps deployment pipeline might look like this:

Situation B: What if I'm starting on a greenfield?

Getting started with automation without previous experience means getting to grips with the current range of tools and choosing the right mix for your own use. Without experience, however, it is hard to tell what you will need across the whole pipeline, and companies usually end up at one of two extremes:

- betting on certainty and choosing a comprehensive commercial solution at full price,

- building gradually and tediously, component by component, with uncertain results.

Neither approach is optimal in terms of financial and time investment.

Let's start in the cloud

Cloud tools offer a better option here. They come with the usual pricing and functional flexibility, and you can easily try them out, including their full integration. They are built for immediate use, require no upfront investment and are easy to scale. That makes them a reasonable compromise between the two extremes mentioned above: you can start small and gradually build a large solution that meets the real needs of the development and operations teams.

So if you are starting from scratch, whether in the cloud or on-premises, I recommend building your deployment pipeline on SaaS services provided in the cloud. You get updated, tested, integrated and secure tools at a predictable cost.

So is this DevOps?

In this article I have tried to describe the needs and tools for building a pipeline from a technical perspective. We discuss the Dev/Sec/Ops approach itself, and our view of how it should be implemented, in this article of our series Cloud Encyclopedia: a quick guide to the cloud.

I'd be glad if you could tell me what your pipeline looks like. And what is your cloud preference: will you stick with the tools you're used to? Do you prefer the AWS toolset? Or have you had a good experience with Azure DevOps?