Containers in the cloud - how do we use them?

Today, the word container is quite often found in the same sentence as the word cloud. Why is this so? Why are containers so often used in the cloud? Let's explain the history and benefits of containerization and look at how we use them in the cloud.

Kamil Vratny

A little history: Containerisation technology has been available since the late 1970s, but the massive expansion of containers began with the advent of Docker on Linux.

Docker

When Docker came out in 2013, it immediately gained a lot of popularity, mainly due to its complete set of tools for creating, launching, managing, distributing and orchestrating containers. Docker is included in most Linux distributions, but it can also be used on other operating systems. The public registry Docker Hub can be used to publish and distribute container images.

Docker is still a major player in the container field, but recently there has been significant competition in the form of more specialized tools such as Podman, Buildah, Skopeo and others. Another change is that containerd, originally part of Docker, has become a separate project within the Cloud Native Computing Foundation. But Docker's main competitive advantage is still the breadth and completeness of the tools it provides.

Container format standards are handled by the Open Container Initiative, a project under the Linux Foundation. Containers are not limited to Linux: Docker containers can also run on Windows Server (2016 or 2019).

What do we need these containers for?

You could say that containers are the next logical step in application distribution and management after the great proliferation of virtual machines (VMs) after 2000.

Computer virtualization has enabled significant simplification of application deployment and more efficient use of resources. However, it has also brought with it the need to run a large number of operating systems. An operating system is something that we need to run an application, but which in itself brings no direct benefit.

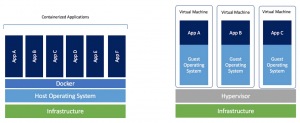

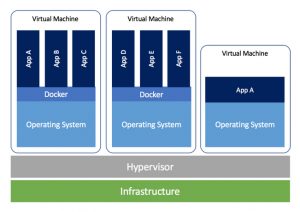

The VM disk image holds the files needed to run the application and the operating system. The container, on the other hand, contains only the files needed to run the application, not the operating system. We can see it in the following picture:

Let's imagine that there are actually two physical servers. While applications A-F on the left run in containers on a single shared operating system, applications A-C on the right each have their own operating system. Comparing the two scenarios, the solution with containers on the left gives us some substantial advantages:

- The operating system runs only once, so it consumes roughly a third of the CPU and RAM that three separate operating systems would.

- The OS was installed only once, so we saved time (even though it may have been automated) and gigabytes of disk capacity.

- The size of the container disk image is dramatically (for example 100×) smaller than the size of the OS disk image with the application.

- Thanks to these savings, we are able to run more applications on the same hardware.
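The savings listed above can be illustrated with a rough back-of-the-envelope sketch. All figures (RAM per operating system, RAM per application, server size) are made-up assumptions chosen only to show the arithmetic; they are not measurements.

```python
# Illustrative numbers only: none of these values come from a real measurement.
OS_RAM_GB = 2.0       # assumed RAM consumed by one running operating system
APP_RAM_GB = 1.0      # assumed RAM consumed by one application
SERVER_RAM_GB = 16.0  # assumed RAM of one physical server


def apps_per_server_with_vms(server_ram: float) -> int:
    """Each application runs in its own VM, so it pays for its own OS."""
    return int(server_ram // (OS_RAM_GB + APP_RAM_GB))


def apps_per_server_with_containers(server_ram: float) -> int:
    """All containers share one OS, so the OS cost is paid only once."""
    return int((server_ram - OS_RAM_GB) // APP_RAM_GB)


vm_apps = apps_per_server_with_vms(SERVER_RAM_GB)
container_apps = apps_per_server_with_containers(SERVER_RAM_GB)
print(f"VMs: {vm_apps} applications, containers: {container_apps} applications")
```

The ratio depends entirely on how large the operating system is compared with the application, so real results will differ.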

But even more important than the savings on hardware resources are the other features of containers:

- Due to the small size of the container image, we are able to quickly upload the container somewhere and run it. Starting the container takes seconds (starting a VM with an application usually takes minutes). For example, if a server fails, the application can be running on another server in a few seconds. If the number of requests for an application increases significantly, we can run its container on other servers very quickly, thus scaling performance.

- A well-designed container is a clear definition of what the application looks like and what dependencies it uses. We have confidence that every container launch leads to the same result because the start-up is automatic.

- Containerized applications can be run on various platforms, both on-premises and in the cloud. Containers are very portable.

- Containers simplify some network issues (e.g. I can start the container without assigning another IP address).

- Containers integrate well with development tools. The result of a well-prepared development pipeline can be automatically created container images waiting impatiently to be run.

- The use of containers can also lead to licensing savings.

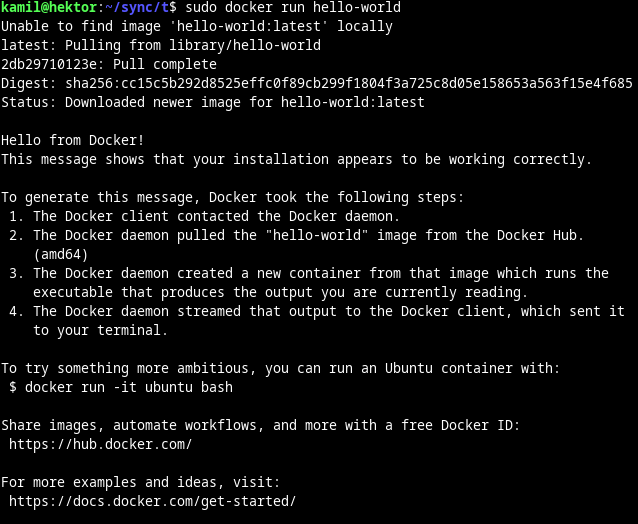

Probably the simplest conceivable container is called hello-world and is available for download on Docker Hub. If you have Docker installed, you can run this container with a single command: docker run hello-world:

What exactly happened? There were several activities:

- Docker detected that the hello-world container image was not available locally (we could have checked this ourselves with the docker images command).

- Docker downloaded the necessary container image from Docker Hub (docker pull).

- Docker created a new container instance from the downloaded image and started it (docker run).

- The container terminated once the task in it had finished (the container instance is in the state Exited).
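The sequence of steps above can be sketched as a small simulation. This is a toy model, not Docker's real implementation: the class `ToyEngine`, its in-memory "registry" and the stored output string are all assumptions made up for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class ToyEngine:
    """A toy stand-in for a container engine; not how Docker is implemented."""
    registry: dict[str, str]  # image name -> program output (hypothetical)
    local_images: dict[str, str] = field(default_factory=dict)
    log: list[str] = field(default_factory=list)

    def pull(self, image: str) -> None:
        # Copy the image from the remote registry into the local image store.
        self.local_images[image] = self.registry[image]
        self.log.append(f"pull {image}")

    def run(self, image: str) -> str:
        # 1. Check whether the image is already available locally.
        if image not in self.local_images:
            # 2. If not, download it from the registry first.
            self.pull(image)
        # 3. Create a container instance from the image and start it.
        self.log.append(f"start {image}")
        output = self.local_images[image]
        # 4. The task has finished, so the container ends in the Exited state.
        self.log.append(f"exited {image}")
        return output


engine = ToyEngine(registry={"hello-world": "Hello from Docker!"})
first = engine.run("hello-world")   # image is missing locally, so it is pulled
second = engine.run("hello-world")  # image is cached now, so there is no second pull
print(engine.log)
```

Running the same image a second time skips the download, which is one reason why repeated container starts are fast.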

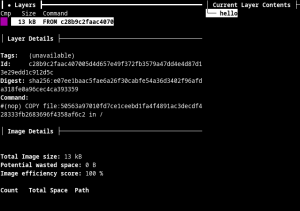

And what does the container image actually look like? It's nothing complicated: it's basically an archive of files and metadata, usually with multiple layers corresponding to the modifications to be made. To look inside, you can use, for example, docker image save or the tool dive. The hello-world container contains, in addition to the metadata, a single file called hello:

So I only need HW, OS and Docker (for example) to run containers? In theory, yes, but typical deployment of containers in practice usually looks like running containers in an OS running in a VM, thus taking advantage of the strengths of both technologies:

Container Orchestration

Container orchestration is the automation of certain activities related to the operation of containers. While these activities can theoretically be carried out manually, it is only by automating them that we can take full advantage of the benefits that containers bring. In particular, these activities are:

- Distribution of container images

- Central configuration, job scheduling

- Resource allocation, starting and stopping containers

- High availability (in case of a server failure, the application is still available)

- Horizontal scaling (application runs in multiple parallel instances)

- Network services (service discovery, IP addresses, ports, balancing, routing)

- Monitoring, health checks
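High availability and horizontal scaling from the list above both come down to the same idea: the orchestrator compares the desired number of instances with what is actually running and starts the missing ones on healthy nodes. The following is a minimal sketch of that idea only; the function `reconcile`, the node names and the instance names are hypothetical and do not correspond to the API of any real orchestrator.

```python
def reconcile(desired: int, placement: dict[str, list[str]],
              healthy: set[str]) -> dict[str, list[str]]:
    """Return a new placement with `desired` instances on healthy nodes."""
    if not healthy:
        raise RuntimeError("no healthy node available")
    # Instances on failed nodes are considered lost; keep the rest.
    current = {node: list(instances)
               for node, instances in placement.items() if node in healthy}
    for node in healthy:
        current.setdefault(node, [])
    running = sum(len(instances) for instances in current.values())
    # Continue numbering after all instances that have ever been placed.
    counter = sum(len(instances) for instances in placement.values())
    while running < desired:
        # Pick the least loaded healthy node (ties broken by node name).
        target = min(sorted(current), key=lambda node: len(current[node]))
        counter += 1
        current[target].append(f"app-{counter}")
        running += 1
    return current


placement = {"node-a": ["app-1", "app-2"], "node-b": ["app-3"]}
# node-a has failed and node-c has joined the cluster.
after_failure = reconcile(3, placement, healthy={"node-b", "node-c"})
print(after_failure)
```

This sketch only ever starts missing instances; a real orchestrator also scales down, checks resource limits and performs health checks.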

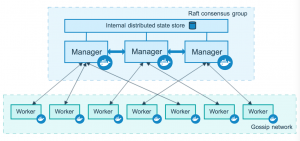

Swarm

Swarm is an orchestration tool that is distributed with Docker. So in a way it's the easiest way, if only because the configuration of Swarm is relatively simple. Cluster nodes are divided into two types - Manager and Worker:

Mirantis, the owner of Docker Enterprise, continues to support the development of Swarm, although it is now focusing more on Kubernetes in the cloud itself.

Kubernetes

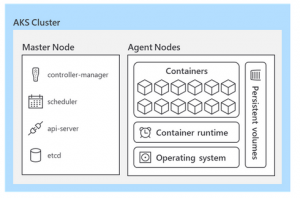

Kubernetes (also K8s) is currently the de facto standard for container orchestration. It was developed by Google (originally for their internal needs, the first version was released in 2015). A Kubernetes cluster uses two main types of nodes - Control plane and Worker node:

Although Kubernetes is an open source project, commercial support is available from several major software companies - Red Hat, Rancher (SUSE), VMware and others.

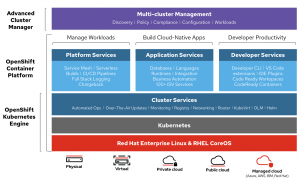

Red Hat OpenShift

OpenShift is a Red Hat commercial product that includes, in addition to the Kubernetes Engine, also Container Platform and Advanced Cluster Management. The number of included services is so large that it would be worth a separate article.

The community open source variant of OpenShift (without some features) is called OKD.

How to containerize an application?

Almost any existing application (unless it uses HW resources directly) can be turned into a container. But it is much better if the application was developed from the beginning with the intention of running it as a container. This implies some features that such an application should ideally have:

- The application should be stateless: the container itself should not store data (it should be in a separate storage - in a database, in a shared cache, on a shared disk, on a shared file system, etc.).

- It should be possible to terminate the application quickly and safely at any time, and to start it again easily and quickly.

- The application should be able to run in parallel so that it can be scaled.

- The application should do just one thing (microservices concept).

- The application configuration should be passed by environment variables (so the configuration is not part of the container).

But containerization of legacy applications can also make sense in a surprising number of cases - the benefits can be, for example:

- automation and acceleration of development and deployment,

- simplification of activities related to the operation of the application,

- reduction of hardware requirements,

- easier deployment in the cloud,

- pressure to modernize applications in the organization.

The way the container is created depends on the tool used. A container image is usually created by taking an existing image and deriving a new image from it by adding application files and dependencies. In the case of Docker, for example, we would use a Dockerfile (to define the modifications to be made to the container) and the docker build command to create an image.
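The idea of deriving a new image from an existing one can be sketched as a stack of layers, where each layer is a set of files and upper layers override lower ones. This is a simplified model, not the actual image format: the `Image` class and the file paths are hypothetical, and file deletions, which real image formats also have to represent, are not modelled.

```python
from dataclasses import dataclass

Layer = dict[str, bytes]  # file path -> file content


@dataclass(frozen=True)
class Image:
    layers: tuple[Layer, ...]

    def derive(self, new_layer: Layer) -> "Image":
        """Create a new image that reuses all existing layers and adds one on top."""
        return Image(self.layers + (new_layer,))

    def filesystem(self) -> Layer:
        """Flatten the layers; files in upper layers override those below."""
        merged: Layer = {}
        for layer in self.layers:
            merged.update(layer)
        return merged


base = Image(({"/etc/os-release": b"base", "/app/config": b"default"},))
app = base.derive({"/app/server.py": b"print('hi')", "/app/config": b"custom"})
print(sorted(app.filesystem()))
```

The derived image shares the base layer with its parent instead of copying it, which is why many images built on the same base take up little extra space.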

But there is often a much simpler way - to use an existing finished container image. In this case, I just need to start the container with the appropriate parameters, or connect the necessary volume.

Containers in the cloud

Finally, we come to the cloud: so how does the cloud relate to containers? If we have a container image ready, we can deploy and operate it in the cloud relatively easily. In addition, the containerized application has features (mentioned above) that make it easier to operate in the cloud, regardless of the cloud provider chosen.

Which specific cloud services can we use today to run containers? Let's take a look at the two largest cloud providers:

AWS (Amazon Web Services)

Amazon Web Services includes several services that allow you to run containerized applications, but they differ in deployment method and features. Let's describe the registry ECR, the almost serverless Fargate, the standard ECS and EKS using Kubernetes, Lambda, Elastic Beanstalk and the ability to run the Red Hat OpenShift platform directly on AWS services.

ECR

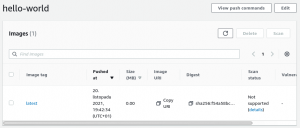

Elastic Container Registry is a highly available service that allows storing and sharing container images. Access to the images is controlled by access rights. The entire container image lifecycle is managed - versioning, tags, archiving. Images stored in ECR can be used in other AWS services (see below) or simply distributed wherever they are needed:

Repositories created in ECR can be public or private, and images can be automatically or manually scanned for vulnerabilities and encrypted.

You pay for the data transferred, but a relatively large volume is free every month.

Fargate

Fargate is a very interesting AWS service that enables running "serverless" containers. This means that I don't have to figure out which server the container or containers will run on, but just define the required HW resources and OS. (Amazon Linux 2 or Windows Server 2019 is used as the OS.)

The service is charged based on CPU and RAM per hour, and in the case of Windows, there is also an OS license fee. Fargate cannot be used alone, but only in combination with ECS or EKS, where I select Fargate as the option to run the container.
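The pricing model described above (CPU and RAM per hour, plus an OS license fee for Windows) can be sketched as a simple calculation. The rates below are hypothetical placeholders, not actual AWS prices; the real values must be taken from the provider's price list.

```python
# Hypothetical rates, chosen only to show the calculation.
PRICE_PER_VCPU_HOUR = 0.04
PRICE_PER_GB_HOUR = 0.004
WINDOWS_LICENSE_PER_VCPU_HOUR = 0.05  # assumption: the license is billed per vCPU


def hourly_cost(vcpu: float, ram_gb: float, windows: bool = False) -> float:
    """Cost of one running container for one hour under the assumed rates."""
    cost = vcpu * PRICE_PER_VCPU_HOUR + ram_gb * PRICE_PER_GB_HOUR
    if windows:
        cost += vcpu * WINDOWS_LICENSE_PER_VCPU_HOUR
    return cost


linux_cost = hourly_cost(vcpu=0.5, ram_gb=1.0)
windows_cost = hourly_cost(vcpu=0.5, ram_gb=1.0, windows=True)
print(f"Linux: {linux_cost:.3f} per hour, Windows: {windows_cost:.3f} per hour")
```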

ECS

In Elastic Container Service, Amazon has realised its vision of what a cloud service for running containers should look like. If we don't have special requirements in terms of cluster management and don't mind some specificity of ECS, this is probably the easier way.

Cluster management (communication with agents on nodes, APIs, configuration storage) is handled by the ECS service itself, so we only need to configure it and don't have to worry about where it runs. The ECS cluster worker nodes are then implemented as EC2 instances or using Fargate.

ECS is provided free of charge, but there is a charge for additional AWS resources used (e.g. EC2).

EKS

Elastic Kubernetes Service is a service primarily for those who want a Kubernetes-compatible cloud service. They can therefore continue to use the usual tools, e.g. kubectl.

The Kubernetes control plane, which would be relatively complex to configure yourself, is available as a highly available AWS service.

Worker nodes can then be implemented using:

- EC2 (managed node groups or self-managed nodes)

- Fargate

EKS is paid per hour and cluster; again, you need to pay for the AWS resources used (e.g. EC2).

Lambda

Lambda is a service that allows running serverless code in the cloud. It is usually used to call a simple event-specific function, but one of the ways to configure a lambda in AWS is to choose a container that will perform the desired function. For some more complicated things that require additional integration libraries (I can have those ready in the container), this may be the way to go.
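For illustration, a function run by Lambda in Python is an ordinary function that receives the event and a context object. The sketch below assumes a hypothetical event with a `name` field and a made-up response shape; how the function is wired into the container image is not shown here.

```python
import json


def handler(event, context):
    """Entry point called once per event; `event` is assumed to be a dict."""
    name = event.get("name", "world")
    return {
        "statusCode": 200,
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


# Local call with a hand-made event; the context object is not used here.
result = handler({"name": "cloud"}, None)
print(result["body"])
```

Any additional libraries the function needs would be installed into the container image alongside this code.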

In the case of Lambda, you pay for the time and RAM used, and the first million runs are free every month.

Elastic Beanstalk

Elastic Beanstalk is a managed service that enables the operation of web applications of various kinds; one of the variants is running an application created as a container.

There is no charge for Elastic Beanstalk itself; you pay for the resources used.

Red Hat OpenShift on AWS (ROSA)

If we want, we can run the complete Red Hat OpenShift platform in the AWS cloud. This is accomplished using Quick Start, which is an automatic deployment of all the necessary resources in AWS according to templates (CloudFormation). The installation will deploy three control plane nodes and a variable number of worker nodes.

Charges apply for used AWS resources (e.g. EC2) and a Red Hat OpenShift subscription is required.

Microsoft Azure

Microsoft Azure also offers a number of services that allow containers to run. Let's take a look at the registry ACR, Container Instances, AKS, Azure Functions, App Service and again at the possibility of operating Red Hat OpenShift directly in Azure.

Azure Container Registry

Azure Container Registry enables, like AWS ECR, the storage and distribution of container images.

Azure Container Instances

Azure Container Instances allow running Linux and Windows containers without the need to set up virtual servers, similar to what AWS Fargate does. However, Azure Container Instances also allow you to deploy containers directly based on defined rules.

CPU and RAM are charged per unit of time, in the case of a Windows container the OS license is added.

AKS

Azure Kubernetes Service is a service providing Kubernetes clusters in Azure, similar to AWS's EKS.

There is no charge for AKS clusters, only for additional Azure resources consumed.

Azure Functions

Azure Functions are similar to AWS Lambda and also allow running serverless code even in the form of a container. You pay for time and RAM used, each month a relatively large number of requests are free.

Azure App Service

App Service is a service enabling operation of web applications of various types, including containerised ones. The service is charged according to the allocated hardware resources.

Azure Red Hat OpenShift

Similar to AWS, you can deploy a complete managed OpenShift infrastructure in Azure, which is operated in the cloud by Microsoft in collaboration with Red Hat.

Containers in brief

Containers are a technology that has already reached a sufficient level of maturity and that has unquestionable benefits for the development and operation of applications - both in private datacentres and in the cloud. We have at our disposal many possibilities to operate containers efficiently, some of which we have introduced today.

The next logical step is serverless architecture, which you can read about here. See also the other articles from the Cloud Encyclopedia.