Cloud backup: why, when, what and, most importantly, how?

Your data in the cloud is not automatically protected. Why, when, what and most importantly how to back up in the cloud?

Jakub Procházka

Backups are like paracetamol tablets. You are calmer when you know you have them somewhere, and they can spare you unnecessary distress when needed. Just as we keep an eye on the expiry date of tablets, with backups we check that they run regularly and that we can actually recover the data from them when required. Let's take a look at what backups look like in cloud services.

Why back up?

We back up data (not only in the cloud) for many reasons, and those reasons usually determine how we back it up. The main goal is for the company not to lose data when something unexpected happens. Such events may be:

- Human error leading to damaged or lost data

- Hardware or software failure

- Natural disasters

- Ransomware, malware and other viruses

- Intentional damage from the inside (inside job)

- Theft and deletion

- Attacks by hackers or crackers

I deliberately put human error first, because it is clearly the most common reason for data recovery, both major and minor.

In the cloud we are not automatically more protected! Backup is not enabled by default and we have to secure data sources according to the same logic as in a normal datacenter.

Back up, back up, back up...

Backup is an important part of the operation of each company. However, each company approaches it slightly differently, as it has its own specific requirements based on technical, commercial, operational or legislative/policy aspects. It is therefore not possible to formulate any universal recommendation, but the overall context of the company and the specific data must be taken into account.

For example, the following requirements may affect the backup method:

- Certification standards (e.g. ISO)

- Legislative regulation (e.g. CNB regulation, GDPR)

- Limiting operation and maintenance costs

- Other technical and business requirements

As with most other major cloud disciplines, it is advisable to think through and plan the target concept first. This activity may be called defining a backup strategy and is often part of a Business Continuity Plan (BCP).

But the topic of today's article is not BCP, data security or disaster recovery (DR), so I will try to focus on the backup itself.

Schrödinger's backup

Very often I see a company set up cloud backups at the beginning and then pay little attention to them. At best, it sets up notifications for when a backup job fails to run. I call these Schrödinger's backups, because until you actually try to restore from them on D-Day, you don't know whether they are any good.

Although these situations can be thrilling or even exciting, I recommend avoiding them. Backups are supposed to be a certainty that you can always count on - they are Plan B. Plan C, which is what happens when backups are missing, is usually not at all pleasant and is difficult to explain not only to management but also, for example, to an auditor carrying out an inspection for a regulator such as the CNB.

Even backups that seem fine at first glance may be corrupted in some way or infected (e.g. by ransomware), or the backup job may run but produce an empty or incomplete backup (tool error, wrong settings, a configuration or permissions change). Any copy of such a backup is then also unusable, and the fact that it sits logically and physically in another zone, for example, does not save us.

Therefore, in addition to notifications from the backup tool about whether a backup job ran, it is advisable to check the backups periodically and carry out test restores. These tests should prove, among other things, that the solution meets the RTO and RPO specified for the data according to the agreed application service level.

- RTO - Recovery Time Objective - the ability to recover within a specified time

- RPO - Recovery Point Objective - the ability to recover to a specific point
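To make the two objectives concrete, here is a minimal Python sketch of how the result of a test restore could be compared against them. The `RestoreTest` structure, its field names and the sample timestamps are illustrative assumptions, not part of any backup tool.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class RestoreTest:
    """Outcome of one test restore (all fields are illustrative)."""
    incident_at: datetime          # simulated moment of data loss
    last_backup_at: datetime       # newest usable backup before the incident
    restore_finished_at: datetime  # moment the service was usable again


def meets_objectives(test: RestoreTest, rto: timedelta, rpo: timedelta) -> dict:
    """Compare the measured recovery time and data-loss window with RTO and RPO."""
    recovery_time = test.restore_finished_at - test.incident_at
    data_loss_window = test.incident_at - test.last_backup_at
    return {
        "recovery_time": recovery_time,
        "data_loss_window": data_loss_window,
        "rto_met": recovery_time <= rto,
        "rpo_met": data_loss_window <= rpo,
    }


# Hypothetical test: incident at 14:00, last backup the previous night at 23:00,
# service restored at 17:30.
test = RestoreTest(
    incident_at=datetime(2021, 11, 3, 14, 0),
    last_backup_at=datetime(2021, 11, 2, 23, 0),
    restore_finished_at=datetime(2021, 11, 3, 17, 30),
)
result = meets_objectives(test, rto=timedelta(hours=4), rpo=timedelta(hours=24))
print(result["rto_met"], result["rpo_met"])
```

With an RTO of 4 hours and an RPO of 24 hours, this hypothetical restore passes both checks; tightening the RPO to 12 hours would fail it, because 15 hours of data would be lost.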

A specific person (or group of persons) should be responsible for the backups and oversee compliance with the requirements arising from the company's strategy. This oversight should include verifying the recoverability of critical backups and related processes at regular intervals, ideally every six months and at least once a year.
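One possible way to verify recoverability is to record checksums of the source data and compare them with the files produced by a test restore. The sketch below uses only the Python standard library; the function names and the sample files are hypothetical, and a real check would read the restored data from wherever the backup tool puts it.

```python
import hashlib
import tempfile
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Hash a file in chunks so large files do not have to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(1024 * 1024), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(root: Path) -> dict[str, str]:
    """Map each file's path relative to root to its SHA-256 digest."""
    return {
        p.relative_to(root).as_posix(): sha256_of(p)
        for p in sorted(root.rglob("*"))
        if p.is_file()
    }


def verify_restore(manifest: dict[str, str], restored_root: Path) -> dict[str, list[str]]:
    """Report files that are missing or differ after a test restore."""
    restored = build_manifest(restored_root)
    missing = [name for name in manifest if name not in restored]
    changed = [
        name for name in manifest
        if name in restored and restored[name] != manifest[name]
    ]
    return {"missing": missing, "changed": changed}


with tempfile.TemporaryDirectory() as tmp:
    source = Path(tmp, "source")
    restored = Path(tmp, "restored")
    source.mkdir()
    restored.mkdir()
    (source / "orders.csv").write_text("id,total\n1,100\n")
    (source / "config.yaml").write_text("retention_days: 30\n")
    manifest = build_manifest(source)

    # Simulate an incomplete restore: one file truncated, one file missing.
    (restored / "orders.csv").write_text("id,total\n")
    report = verify_restore(manifest, restored)
    print(report)
```

A backup job that reports success but produces an empty or partial backup would show up here as missing or changed files, which a plain "job completed" notification would not reveal.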

Backup (not only) in the cloud

In this article we focus primarily on cloud backup; however, backup principles remain the same regardless of whether it is a pure cloud data backup (Azure, AWS, GCP), multi-cloud or hybrid cloud.

In all cases, requirements should be consistent. Backup needs should be clearly defined by the company and not limited by the specific environment. This is why most major backup software supports multiple environments and allows you to link different sources and targets for backups.

The integration is usually done either directly by a connector or the backup service provider offers its own variant of the cloud solution, for example in the form of an appliance.

What to back up in the cloud?

In the cloud, we divide backup into three basic categories: IaaS, PaaS, SaaS. PaaS and SaaS services usually have limited native backup capabilities from the provider, but can often be integrated with external tools (which is often desirable given the aforementioned limitations). In the case of IaaS, backups can usually be handled similarly to on-premise (classic virtual machine backup).

The fact that data runs in the cloud does not mean that it is automatically backed up! This common misconception also applies to SaaS. The data is always under the customer's control (see the shared responsibility model in a previous article of this series) and cloud providers leave the backup to the customer. The exception may be some SaaS services, where the provider sometimes performs a partial backup natively.

For SaaS solutions, I always recommend verifying the backup details and deciding whether the solution is sufficient and whether you can rely at all on backups that are made by someone else (and thus are not explicitly under your control).

For example, Microsoft SharePoint Online is only backed up every 12 hours with only 14 days retention (i.e. I only have data 14 days back). You can request these backups, but Microsoft has 30 days to restore them. Are you happy with this backup scheme?

In addition to the data itself, we must not forget about infrastructure and general configuration data. Ideally, we should have our cloud architecture defined using Infrastructure as Code (IaC) and keep these files backed up, updated and versioned. This way we are able to restore not only the data, but the entire operating environment.

What tool to use?

Most customers are already entering the cloud with an existing on-premise solution. If an existing on-premise backup tool supports the public cloud, we can integrate it and use the cloud provider's native tools only for unsupported services (if any).

Native backup tools include Azure Backup in Microsoft's cloud and AWS Backup at Amazon. The most well-known third-party products that also support public clouds include:

- Veeam

- Commvault

- Unitrends

- Acronis

The functionality of each tool varies and should be reviewed more thoroughly. For startups or companies just getting started with the cloud, a cloud provider's native tool may be the easiest and most cost-effective choice.

How often to back up and where?

Once you have chosen a tool, it's time to decide how often to back up, where to store the backups, and how long to keep them (retention).

The frequency of backups is determined by data criticality, which results from the business analysis of the application. We determine what RPOs we have and for what period the company can afford to be without data. For now we disregard high availability and possible active-passive and other failover setups.

The golden rule says that we should back up on a 3-2-1 basis, i.e. keep three copies of the data on two different devices (media), with one copy in another location. This can also be fulfilled in the cloud, taking into account availability zones and the archive tier.
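As a rough illustration, the 3-2-1 rule can be expressed as a simple check over an inventory of copies. The `BackupCopy` structure and the medium and location labels below are made up for the example, and whether the production data counts as one of the three copies is a policy decision; here it does.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BackupCopy:
    name: str
    medium: str    # illustrative label, e.g. a storage type or tier
    location: str  # illustrative label, e.g. a region, zone or site


def satisfies_3_2_1(copies: list[BackupCopy], primary_location: str) -> bool:
    """At least three copies, on at least two media, at least one outside the primary location."""
    enough_copies = len(copies) >= 3
    enough_media = len({c.medium for c in copies}) >= 2
    has_offsite = any(c.location != primary_location for c in copies)
    return enough_copies and enough_media and has_offsite


copies = [
    BackupCopy("production data", "premium-disk", "westeurope"),
    BackupCopy("vault backup", "vault-standard", "westeurope"),
    BackupCopy("archived backup", "archive-tier", "northeurope"),
]
print(satisfies_3_2_1(copies, primary_location="westeurope"))      # True
print(satisfies_3_2_1(copies[:2], primary_location="westeurope"))  # False
```

The second call fails because only two copies remain and neither of them sits outside the primary location.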

For production backups, it's probably most common to perform daily backups at night. That is, at the end of each day a new backup of the data is taken (usually incremental), and once a week a full backup.

Daily backups are most often kept for 30-60 days on fast storage - the hot tier in Azure, Standard in AWS. After this time, we keep only weekly and monthly backups, which we move to cheaper and slower storage tiers using lifecycle management - cool and then archive in the case of Azure; Standard-IA, Glacier and Deep Archive in AWS.
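The retention scheme described above can be sketched as a small decision function. This is not the lifecycle-management configuration of Azure or AWS, just the underlying logic in Python; the tier names follow the Azure wording, the 30-day hot period is the lower bound mentioned in the text, and the 180-day cool period is an assumption chosen for the example.

```python
from datetime import date

VALID_KINDS = {"daily", "weekly", "monthly"}


def storage_tier(kind: str, taken_on: date, today: date,
                 hot_days: int = 30, cool_days: int = 180) -> str:
    """Return the tier a backup should be on today, or 'delete' if it has expired."""
    if kind not in VALID_KINDS:
        raise ValueError(f"unknown backup kind: {kind!r}")
    age = (today - taken_on).days
    if age <= hot_days:
        return "hot"      # recent backups stay on fast storage
    if kind == "daily":
        return "delete"   # only weekly and monthly backups are kept longer
    if age <= cool_days:
        return "cool"
    return "archive"


today = date(2021, 12, 1)
print(storage_tier("daily", date(2021, 11, 20), today))   # hot
print(storage_tier("daily", date(2021, 10, 1), today))    # delete
print(storage_tier("weekly", date(2021, 10, 1), today))   # cool
print(storage_tier("monthly", date(2021, 1, 1), today))   # archive
```

In practice the same thresholds would be entered into the provider's lifecycle rules or the backup tool's retention policy rather than evaluated in custom code.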

The Archive tier in Azure and Deep Archive in AWS are storage tiers comparable to proven tape cartridges. In this case, we are talking about archiving rather than backup as such. Data on the archive tier is stored very cheaply, but restoring it can take many hours, and with large volumes even days.

Whether incremental or full backups are performed can be configured in the selected backup software, and some tools may also support data deduplication. Unless we are backing up to our own disk arrays outside the cloud, we can influence these functions only at the software level; at the storage level we deal only with the individual tiers (layers).

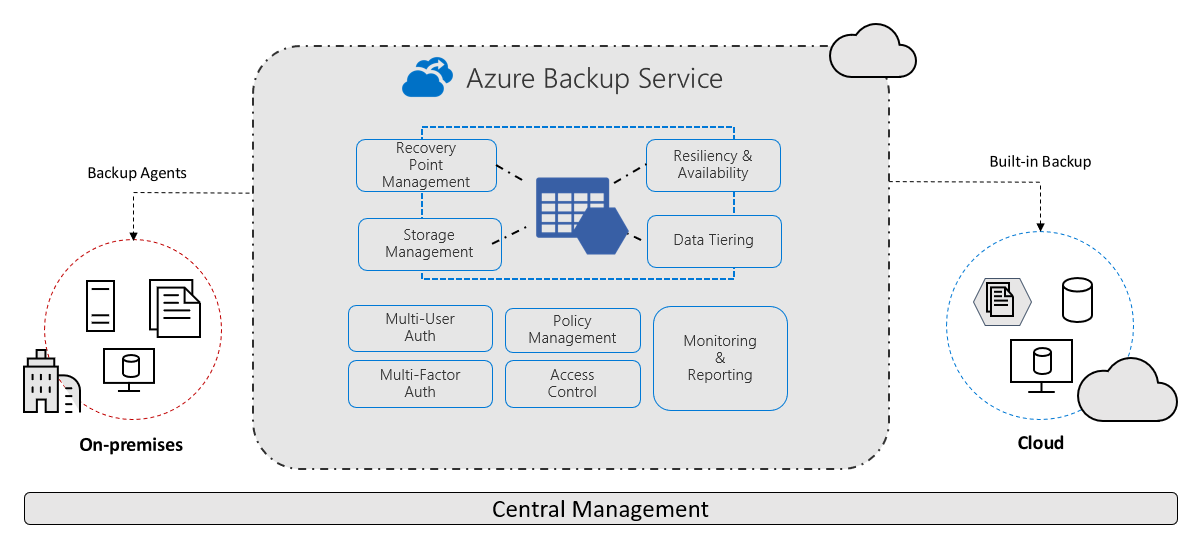

Backup in Azure

Azure is evolving very quickly, so services often change literally under customers' hands. At the same time, Microsoft (MS) likes to rename services and move functionality between them. Because of this, the overall backup solution can be spread across multiple services with easily confused names, which makes an already hard-to-follow solution even less clear.

MS is becoming aware of this, which is why it has been taking steps in recent months (written in the second half of 2021) to make the whole backup solution simpler and, above all, clearer.

In the area of backup, Azure used to be insufficient as an overall backup solution for more demanding clients. Now a new service is coming out that has the ambition to change that: Backup Center, which is meant to act as a single pane of glass for Azure backups. However, until it moves from preview to general availability (GA), it may contain bugs, has no SLA, and can theoretically be withdrawn from the offering.

For Azure backups, a critical component is the Recovery Services vault. Official Microsoft documentation defines it as a storage entity in Azure that is used to store data. This data is typically copies of data or configuration information for virtual machines (VMs), servers, workstations, and other workloads.

Using a Recovery Services vault, it is possible to back up various Azure services such as IaaS VMs (both Linux and Windows) and Azure SQL databases. It makes it easier to organize the backed-up data and reduces the management effort. Recovery Services vaults are based on the Azure Resource Manager (ARM) model, which provides functionality such as:

- Advanced options for securing backed-up data

- Central monitoring for hybrid IT environments

- Azure RBAC (Role Based Access Control)

- Soft Delete

- Cross Region Restore

Simply put, it is a comprehensive service providing backup, disaster recovery, migration tools and other functionality directly in the cloud.

Azure Migrate, which was previously used only for workload measurement and assessment, is now cited as the primary tool for migration. It now includes tools for the migration itself, which was previously done separately via Azure Site Recovery (ASR) in the Recovery Services vault.

The Recovery Services vault is Microsoft's preferred option for backup and disaster recovery, and it is built natively into Azure (sometimes also referred to generically as Azure Backup).

The main advantage of the Recovery Services vault is the ability to back up on-premise workloads and perform failover both from on-premise to the cloud and from one region to another.

Azure, Azure Stack and on-premise data can all be backed up to a Recovery Services vault.

A list of supported systems with support matrix is available here.

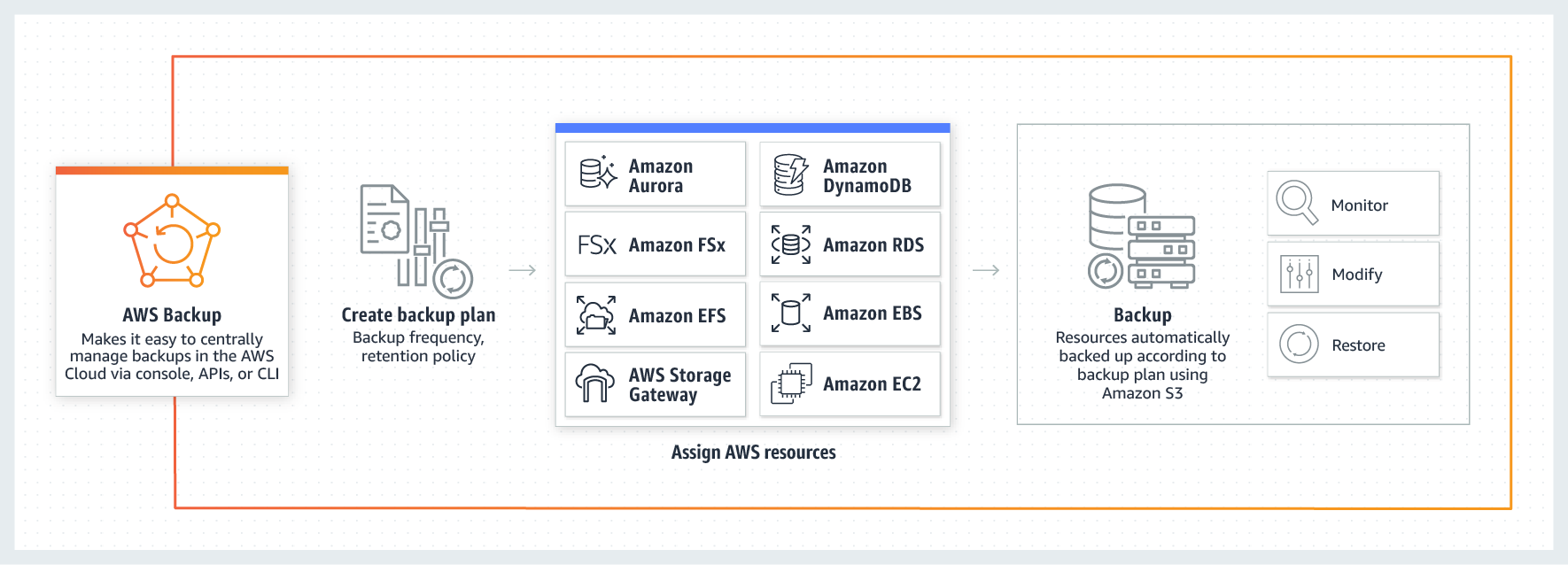

Backup in AWS

The native tool in AWS is called AWS Backup. The principle is similar to Microsoft's competing service: it also supports lifecycle management, backups of workloads running outside AWS itself (e.g. on-premise) and many other features such as:

- Centralized backup management

- Policy-based backup

- Tag-based backup

- Automated backup scheduling

- Automated retention management

- Backup activity monitoring

- AWS Backup Audit Manager

- Lifecycle management policies

- Incremental backup
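The idea behind policy-based and tag-based backup from the list above can be illustrated generically. The sketch below is not the AWS Backup API; `BackupPlan`, its fields and the resource names are hypothetical, and it assumes that a resource must carry every tag the plan selects on, which may differ from how a particular tool combines tag conditions.

```python
from dataclasses import dataclass, field


@dataclass
class BackupPlan:
    name: str
    schedule: str        # free-text description, for illustration only
    retention_days: int
    selection_tags: dict[str, str] = field(default_factory=dict)


def resources_for_plan(plan: BackupPlan, resources: dict[str, dict[str, str]]) -> list[str]:
    """Return the ids of resources whose tags contain every tag the plan selects on."""
    if not plan.selection_tags:
        return []  # a plan without selection tags matches nothing in this sketch
    return sorted(
        resource_id
        for resource_id, tags in resources.items()
        if all(tags.get(key) == value for key, value in plan.selection_tags.items())
    )


resources = {
    "vm-web-01": {"env": "prod", "backup": "daily"},
    "vm-test-01": {"env": "test", "backup": "daily"},
    "db-orders": {"env": "prod", "backup": "daily"},
    "vm-batch": {"env": "prod"},
}
plan = BackupPlan("prod-daily", "daily at 01:00", 30, {"env": "prod", "backup": "daily"})
selected = resources_for_plan(plan, resources)
print(selected)  # ['db-orders', 'vm-web-01']
```

The practical benefit is that a newly created resource is picked up by the right plan as soon as it is tagged correctly, without anyone having to edit the backup configuration.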

For on-premise data backup, you must deploy AWS Storage Gateway. Advanced automation tools are definitely one of the strong points of AWS Backup.

If you're only backing up to one provider in the cloud, I recommend going the native tool route. In the case of multi-cloud or hybrid cloud architecture, it is advisable to consider each tool and explore in more detail the combination that fits your needs.

Conclusion

Don't wait until you have a problem - whether it's human error or any other cause.

Run restore tests. The cloud is an amazing tool in that you can restore a large portion of your environment, verify the accuracy of the data, and delete the restored data at very little cost.

And then you can go back to sleep...

You can find the previous 11 chapters of the Cloud Encyclopedia series here.