Cloud Management 1: Don't patch, redeploy!

Cloud (whether public or private) can be described as a "software-defined datacenter", meaning that all elements of our infrastructure can be managed by software. But we can choose how we approach this: cloud management can be done the old-fashioned way, manually, or the modern way, with software and automation. This mini-series of articles is about the latter.

Petros Georgiadis

Manual cloud management or an uncomfortable iron shirt

In an on-premise data center, manual work (without automation) is a common "iron shirt": an uncomfortable constraint that is hard to take off. After all, few companies have their entire data center "software-defined", whereas in the cloud everything is software-defined from the start.

Even in the cloud, we can work the old-fashioned way: manually, slowly, with the risk of human error and with lots of repetitive activities. We start in the dev environment, proceed through test, integration test, acceptance test and pre-production (pre-prod), and finally arrive at the production environment (prod), repeating the same manual steps in each.

However, we often do not ensure that the pre-production environments are identical to the production one. This means we can't be sure that an operation such as an operating system (OS) upgrade won't introduce a change that is incompatible with our application. Such a situation can result in application failure and a subsequent rollback.
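Differences like these are easy to detect automatically. As a minimal sketch, assuming each environment can export a manifest mapping component names to installed versions (the manifest format, the component names and the `version_drift` helper are all hypothetical), a pipeline step could list the differences before an upgrade is promoted to prod:

```python
from typing import Dict, List


def version_drift(preprod: Dict[str, str], prod: Dict[str, str]) -> List[str]:
    """List components whose installed versions differ between two environments.

    Both arguments are hypothetical manifests mapping component name -> version.
    A component missing from one side is reported with the value None.
    """
    problems = []
    for component in sorted(set(preprod) | set(prod)):
        pre_version, prod_version = preprod.get(component), prod.get(component)
        if pre_version != prod_version:
            problems.append(f"{component}: pre-prod={pre_version} prod={prod_version}")
    return problems


if __name__ == "__main__":
    preprod = {"os-image": "2024.03", "java": "17.0.10", "app": "3.2.0"}
    prod = {"os-image": "2024.01", "java": "17.0.10", "app": "3.2.0"}
    for line in version_drift(preprod, prod):
        print(line)  # os-image: pre-prod=2024.03 prod=2024.01
```

An empty result means the two environments run the same versions, so a test in pre-prod says something meaningful about prod.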

Another drawback of manual infrastructure management is that it requires considerable labour and time.

Let me give you an example from a PCI DSS certified environment. The standard requires all operating systems to be updated at least once every three months. If you have 60 servers, the updates must be done at night by two people (an infrastructure admin plus an application specialist, and each application needs its own specialist). That adds up to a lot of sleepless nights, stress and wasted money.

When I ask the manager responsible for running the infrastructure whether he would like to automate these updates, the answer is usually: sure, if it makes economic sense. But I always reply that the point of automation is not just to compare the cost of building an automated environment with the cost of people overseeing updates for the next five years.

The time of IT specialists should be devoted to development instead of laborious maintenance of the environment.

Moreover, IT professionals today choose what kind of work they want to do, and it's quite possible that in five years nobody will want to update operating systems at night. So there's no need to wait; let's move on to the other way of working with the cloud.

Modernising cloud management

All cloud environments (AWS, Azure, GCP, OpenStack, Proxmox, Kubernetes, etc.) can be controlled programmatically through their APIs. Their GUIs are built on top of these APIs, so any action we can perform through the GUI can also be performed directly through the API.

But not all IT professionals are skilled enough programmers to call cloud APIs directly from their own code. That's why there are tools that handle this communication, such as AWS CLI, Azure CLI, Terraform, kubectl, etc. This brings us very close to the concept of Infrastructure as Code (IaC), which we have already written about in our cloud encyclopedia.
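The core idea behind declarative IaC tools such as Terraform is to compare the desired state described in code with the actual state of the infrastructure and derive a plan from the difference. The sketch below illustrates only that idea; it is not how Terraform is implemented, and the resource model (a name mapped to a dictionary of attributes) and the `plan` function are hypothetical simplifications.

```python
from typing import Dict, List, Tuple

# Hypothetical, simplified resource model: attribute name -> value
Resource = Dict[str, str]


def plan(desired: Dict[str, Resource],
         actual: Dict[str, Resource]) -> Tuple[List[str], List[str], List[str]]:
    """Compare desired and actual state and return (create, update, delete) lists."""
    to_create = sorted(name for name in desired if name not in actual)
    to_delete = sorted(name for name in actual if name not in desired)
    to_update = sorted(
        name for name in desired
        if name in actual and desired[name] != actual[name]
    )
    return to_create, to_update, to_delete


if __name__ == "__main__":
    desired = {
        "web-1": {"image": "app-v2", "size": "small"},
        "web-2": {"image": "app-v2", "size": "small"},
    }
    actual = {
        "web-1": {"image": "app-v1", "size": "small"},
        "old-worker": {"image": "worker-v1", "size": "large"},
    }
    create, update, delete = plan(desired, actual)
    print("create:", create)  # create: ['web-2']
    print("update:", update)  # update: ['web-1']
    print("delete:", delete)  # delete: ['old-worker']
```

A real tool would then call the cloud API for each planned action. For some attributes, such as the image a VM was built from, tools typically replace the resource rather than modify it in place.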

Let's take a look at what modern cloud management looks like, that is, how we manage infrastructure created with IaC, patch operating systems and the platforms we use, and deploy new versions of applications. Our IT systems can be deployed to the cloud as 1) virtual servers (VMs), 2) containers or 3) serverless functions.

1) CLOUD MANAGEMENT & Virtual Servers

As the name implies, a virtual server is a server. And when you say server, the average IT person imagines something very expensive, large and complex that needs to be taken care of. This premise is indeed true for a physical server, but potentially harmful and limiting in the case of a virtual server.

The real value of a VM lies in the functionality it provides and the data it holds. Ideally, though, persistent data should not be stored directly on the VM but on external storage or in a database.

We should associate that functionality with the application that provides it rather than with the server itself. In other words, a VM is just something that allows us to run the application and connect it to its data and to the outside world.

Pets vs. cattle

So why are there virtual servers that have been running for many years, have a name, a static IP address (which everyone on the team knows well) and IT administrators sit up nights to keep them in good shape?

This is because installing, configuring and testing a virtual server completely by hand is not a trivial matter. Once the job is done, it's easier to install upgrades and new versions of the application than to throw it all away and start over. So we look after our virtual servers like pets.

Creating and testing installation scripts is not entirely trivial either. But once you have done it, you can reuse the scripts far more than you can reuse a manually installed VM.

Once we have automated virtual server installations, we can mass-produce new VMs "like Bata made shoes". We don't care whether we create one, three or ten virtual servers, and we don't have to think twice about what to do with an outdated VM: we just throw it away and replace it with a new one. In other words, we treat VMs like cattle, not pets.
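To illustrate the "throw it away and replace it" approach, here is a minimal sketch of replacing outdated VMs instead of patching them. `launch` is a hypothetical stand-in for a real cloud API call, and the image names are made up. Each new VM is started before the old one is discarded, so capacity never drops during the rollout.

```python
from dataclasses import dataclass
from itertools import count
from typing import List

_next_id = count(1)  # simple ID generator for this illustration


@dataclass
class Instance:
    instance_id: int
    image: str


def launch(image: str) -> Instance:
    """Hypothetical stand-in for a cloud API call that starts a VM from an image."""
    return Instance(instance_id=next(_next_id), image=image)


def redeploy(fleet: List[Instance], current_image: str) -> List[Instance]:
    """Replace outdated VMs with fresh ones instead of patching them in place.

    Each replacement is launched before the old VM is discarded, so the fleet
    never shrinks below its original size during the rollout.
    """
    fleet = list(fleet)  # don't mutate the caller's list
    for old in [vm for vm in fleet if vm.image != current_image]:
        fleet.append(launch(current_image))  # start a new VM from the current image
        # (a real rollout would wait here until the new VM passes its health check)
        fleet.remove(old)                    # throw the outdated VM away
    return fleet


if __name__ == "__main__":
    fleet = [launch("os-2024-01"), launch("os-2024-01"), launch("os-2024-04")]
    print([vm.image for vm in redeploy(fleet, "os-2024-04")])
    # ['os-2024-04', 'os-2024-04', 'os-2024-04']
```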

Golden image vs. bootstrapping

A virtual server is usually started from an image. You typically begin with a base image that contains a specific version of the operating system, and then install the necessary platforms (e.g. Java) and applications. The resulting virtual server can be saved as a new image, which is then used to start further VMs. Such a fully installed image is called a golden image.

In cloud environments, we can also install the necessary platforms and applications automatically as soon as the server starts, using cloud-init scripts. This is called server bootstrapping.

- The advantage of a golden image is that a new VM starts quickly. The disadvantage is a more complex process for upgrading individual components and creating a new image. To use golden images effectively, you need CI/CD pipelines that automate the creation of new image versions.

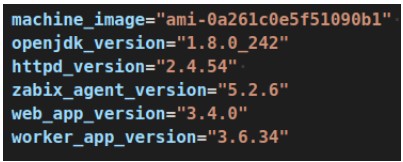

- If you don't have such a CI/CD pipeline, use server bootstrapping instead. In this case, the server takes longer to start (on the order of minutes), and you must resist the temptation to update the operating system immediately after boot (e.g. sudo yum update). Also, don't forget to pin specific versions of all components that you install at startup (see picture).
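Pinning versions at startup can be expressed in a cloud-init `#cloud-config` document. The sketch below generates such user data. It relies on cloud-init's `packages` list, which accepts `[name, version]` pairs, and sets `package_upgrade: false` so that the OS is not upgraded at boot. The package names and versions are hypothetical, `render_user_data` is an illustrative helper, and valid version strings depend on your distribution's package manager.

```python
import json
from typing import Dict


def render_user_data(packages: Dict[str, str]) -> str:
    """Render a cloud-init #cloud-config document that installs pinned package versions.

    Versions are emitted as quoted strings so that YAML never reads e.g. 1.20
    as the number 1.2. A package without a version is rejected.
    """
    lines = [
        "#cloud-config",
        "package_upgrade: false",  # do not upgrade the whole OS at boot
        "packages:",
    ]
    for name, version in sorted(packages.items()):
        if not version:
            raise ValueError(f"package {name!r} has no pinned version")
        lines.append(f"  - [{json.dumps(name)}, {json.dumps(version)}]")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical package names and versions, for illustration only.
    print(render_user_data({"nginx": "1.20.1", "my-app": "2.4.1"}))
```

Rejecting unpinned packages at generation time means an unversioned install never reaches the bootstrap script in the first place.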

It is good practice to keep the pre-prod and production environments identical: the installed versions of each component should be the same, and the environments should differ only in their configuration. That means configuration should never be part of the image; it should be loaded from an external source.

For inspiration on how to build cloud applications, see The Twelve-Factor App.
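In the Twelve-Factor approach, configuration is stored in environment variables, so the same image can run unchanged in pre-prod and prod. A minimal sketch of that idea follows; the variable names `APP_DATABASE_URL` and `APP_LOG_LEVEL`, the database URLs and the `load_config` helper are hypothetical examples.

```python
import os
from dataclasses import dataclass
from typing import Mapping, Optional


@dataclass(frozen=True)
class Config:
    database_url: str
    log_level: str


def load_config(env: Optional[Mapping[str, str]] = None) -> Config:
    """Build the app configuration from environment variables.

    The image stays identical across environments; only these (hypothetical)
    variables differ between pre-prod and prod.
    """
    env = os.environ if env is None else env
    if "APP_DATABASE_URL" not in env:
        # fail fast instead of silently falling back to a value baked into the image
        raise RuntimeError("APP_DATABASE_URL is not set")
    return Config(
        database_url=env["APP_DATABASE_URL"],
        log_level=env.get("APP_LOG_LEVEL", "INFO"),
    )


if __name__ == "__main__":
    preprod = load_config({"APP_DATABASE_URL": "postgres://db.preprod.internal/app"})
    prod = load_config({"APP_DATABASE_URL": "postgres://db.prod.internal/app",
                        "APP_LOG_LEVEL": "WARNING"})
    print(preprod)
    print(prod)
```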

Autoscaling

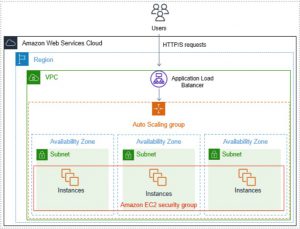

If we can easily create new virtual servers, how can we make the most of it? Cloud environments provide platforms (Platform as a Service, PaaS) that do a lot of the work for us, and that is the main reason to consider the public cloud at all.

A virtual server you start in the public cloud will not be cheaper than one you run on your own physical server. On the other hand, the public cloud offers opportunities that would be very difficult to achieve on our own, for example automatically starting our virtual server in one of the nine available data centers within the same region.

To open the door to these opportunities, your app must meet two basic conditions:

- You must not store persistent data on the local disks of the virtual server.

- It must not matter to you whether a particular VM instance is running or not - i.e. VMs are interchangeable and you can add and remove them as needed.

Once both conditions are met, you can use e.g. auto scaling groups, which provide the following benefits:

- maintain the minimum required number of healthy VMs,

- remove VMs that fail the health check,

- add and remove VMs according to current utilization, based on various metrics (e.g. CPU usage, RAM usage, number of requests directed to the VMs),

- automatically register/deregister VM instances with the load balancer,

- start the missing VMs in the remaining available data centers if a data center fails,

- deploy new versions without outages (in conjunction with the load balancer).
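The scaling and self-healing rules listed above can be sketched as a simple reconciliation step. This is a simplified model loosely in the spirit of target-tracking scaling on average CPU, not the exact algorithm of any cloud provider. Cooldowns, instance warm-up, load balancer registration and spreading VMs across data centers are left out, and all names are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Vm:
    name: str
    healthy: bool
    cpu_percent: float


def desired_capacity(vms: List[Vm], target_cpu: float, min_size: int, max_size: int) -> int:
    """Size the group so that the average CPU of healthy VMs moves toward target_cpu."""
    healthy = [vm for vm in vms if vm.healthy]
    if not healthy:
        return min_size
    avg_cpu = sum(vm.cpu_percent for vm in healthy) / len(healthy)
    wanted = math.ceil(len(healthy) * avg_cpu / target_cpu)
    return max(min_size, min(max_size, wanted))  # clamp to the group's limits


def reconcile(vms: List[Vm], target_cpu: float,
              min_size: int, max_size: int) -> Tuple[List[str], int]:
    """Return (names of VMs to terminate, number of new VMs to launch)."""
    unhealthy = [vm.name for vm in vms if not vm.healthy]
    healthy = [vm.name for vm in vms if vm.healthy]
    desired = desired_capacity(vms, target_cpu, min_size, max_size)
    surplus = max(0, len(healthy) - desired)    # scale in: drop extra healthy VMs
    to_launch = max(0, desired - len(healthy))  # scale out and replace failed VMs
    return unhealthy + healthy[:surplus], to_launch


if __name__ == "__main__":
    group = [Vm("web-1", True, 90.0), Vm("web-2", True, 70.0), Vm("web-3", False, 0.0)]
    print(reconcile(group, target_cpu=50.0, min_size=2, max_size=6))  # (['web-3'], 2)
```

In the example, the failed VM is terminated and two new ones are requested, because two healthy VMs at 80 % average CPU would need about four VMs to get close to the 50 % target.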

2) CLOUD MANAGEMENT & Containers

Containers are a much younger technology than server virtualization, and thus much better suited to today's automated age.

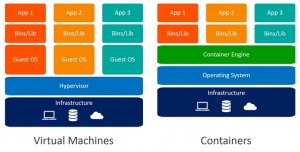

The figure above shows that each virtual server contains its own operating system, libraries and applications, whereas a container contains only libraries and the application and shares the operating system kernel of its host. (This makes a typical VM image tens of GB in size, whereas a typical container image is only hundreds of MB.)

Fortunately, container technology makes it clear to users from the outset that a container may live for only a few minutes. There is therefore no point in connecting to a running container and making manual changes.

I would almost say that the idea of VM instance expendability came from the world of containers, and it's an example of how newer technology has helped modernize the previous one (kind of like when snowboards inspired the creation of carving skis and today we use both).

Container image

Just as there are VM images, there are container images, but with one major difference: a container image should always be a golden image, i.e. it should contain all the necessary libraries and a specific version of the application. (Purely theoretically, we could install something after the container starts, but this idea seems "vulgar" to me, so I won't develop it further.)

Containers should start very quickly (within seconds), which you can only achieve with a ready-made image. Upload the image to a repository (e.g. Nexus and JFrog in an on-premise environment, or Docker Hub, AWS ECR and Azure Container Registry in the cloud), from where you can easily distribute your application and its different versions.
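Distributing a golden image only works if deployments refer to one specific version of it. As a sketch, a pipeline could reject image references that carry no explicit version. The rule applied here is an assumption about what counts as "pinned": a digest is treated as immutable, and an explicit tag other than `latest` is accepted as a weaker form of pinning. `is_pinned` is a hypothetical helper, not part of any registry's API.

```python
def is_pinned(image_ref: str) -> bool:
    """Return True if an image reference names a specific version of the image.

    A digest (name@sha256:...) is immutable. An explicit tag other than
    'latest' is accepted as a weaker, but common, form of pinning.
    """
    if "@sha256:" in image_ref:
        return True
    _, sep, tag = image_ref.rpartition(":")
    # No ':' at all, an empty tag, or a ':' that belongs to a registry port
    # (e.g. localhost:5000/app) means no usable tag was given.
    if not sep or not tag or "/" in tag:
        return False
    return tag != "latest"


if __name__ == "__main__":
    for ref in ["nginx", "nginx:latest", "nginx:1.25.3",
                "localhost:5000/shop/api", "localhost:5000/shop/api:2.1.0"]:
        print(f"{ref:32} pinned={is_pinned(ref)}")
```

The `"/" in tag` check works because image tags cannot contain a slash, so anything after a colon that still contains one must be part of the registry host and path.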

Container management platforms

Containers have to run on a container engine. Docker Engine or Docker Compose are suitable only for local development and testing, not for running the application in production.

For production deployment, the following are used:

- open source Kubernetes (k8s), which you can run on-premise, in the cloud or buy as a service (PaaS) from a range of different providers,

- proprietary solutions from public cloud providers (e.g. AWS ECS, Azure Service Fabric, Google Cloud Run and others), which are usually very well integrated with the provider's other cloud services.

If you decide to run a container platform yourself, you will most likely do so on virtual servers. Keep in mind that you should automate the deployment of the platform itself, as described in the section on virtual servers.

3) CLOUD MANAGEMENT & Serverless functions

A serverless function is basically code in a specific programming language plus the necessary libraries of that language. Serverless functions are mainly associated with public cloud providers (e.g. AWS Lambda, Azure Functions, Google Cloud Functions), but there are also Kubernetes projects that allow us to run serverless functions in our k8s cluster (e.g. Kubeless, Knative or Fn).

It is worth noting that the libraries we use in our functions may contain vulnerabilities that we need to know about and should be able to fix with little effort, by upgrading the library to a newer version.
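Upgrading is only easy if you know which pinned library versions are affected. The sketch below compares a function's pinned dependencies with minimum safe versions. The library names and the advisory data are hypothetical, `vulnerable` is an illustrative helper, and the version parser handles only plain dotted numbers; real vulnerability scanners use proper advisory feeds and full version semantics.

```python
from typing import Dict, List, Tuple


def parse_version(version: str) -> Tuple[int, ...]:
    """Parse a plain dotted numeric version ('2.10.0') into a comparable tuple.

    Trailing zeros are dropped so that '1.2' and '1.2.0' compare as equal.
    Pre-release tags such as '1.0rc1' are not supported by this sketch.
    """
    parts = [int(part) for part in version.split(".")]
    while len(parts) > 1 and parts[-1] == 0:
        parts.pop()
    return tuple(parts)


def vulnerable(pinned: Dict[str, str], minimum_safe: Dict[str, str]) -> List[str]:
    """Report libraries whose pinned version is below the minimum safe version."""
    findings = []
    for lib, version in sorted(pinned.items()):
        floor = minimum_safe.get(lib)
        if floor is not None and parse_version(version) < parse_version(floor):
            findings.append(f"{lib} {version}: upgrade to >= {floor}")
    return findings


if __name__ == "__main__":
    pinned = {"libfoo": "1.4.2", "libbar": "2.10.0"}        # hypothetical function deps
    minimum_safe = {"libfoo": "1.4.10", "libbar": "2.9.0"}  # hypothetical advisory data
    print(vulnerable(pinned, minimum_safe))  # ['libfoo 1.4.2: upgrade to >= 1.4.10']
```

Comparing integer tuples rather than strings matters here: as strings, "1.4.2" would sort after "1.4.10" and the vulnerable version would be missed.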

Now that I've mentioned vulnerabilities in libraries, I'm sure a few more lines could be written about vulnerability scanning, deploying patches through the CI/CD pipeline, working with IaC scripts, and tying it all together into one end-to-end process. Modern cloud management is a big topic, so we'll save some of it for next time.

Good advice in conclusion: don't patch, redeploy!

The procedures I describe in this article have been proven over many years and by thousands of companies around the world. You will find a number of detailed technical articles and tutorials on the subject. So, in short:

- Don't be afraid of change - we're not doctors, so if we make a mistake, nobody dies. (The advice is only for those who do not operate vital IT systems.)

- Don't dig your fields with a spade, get a tractor!

- Have big plans, but feel free to start with small steps.

- If you don't know what to do, please contact us. We are happy to help, not only with cloud management but with other topics too.