Compute-intensive and legacy applications require a specific approach before migrating to the cloud. Why is it worth it?

When planning a migration to the cloud, you sometimes encounter applications that will not perform satisfactorily on commonly used compute plans (VM sizes, instance types, ...). These are usually older (legacy) applications, applications that are not optimized, or, on the contrary, applications whose code is optimized, but for the existing on-premise environment. How can such problems be solved?

Tomas Krčma

High power consumption and cooling of fast processors

Legacy single-threaded applications quite often require the highest possible processor clock speed. However, you will rarely find this in the datacenters of large cloud providers. The high power and cooling requirements of processors clocked at over 3 GHz don't sit well with high-density datacenter environments.

CPUs with high clock speeds are not energy efficient. Moreover, a datacenter architecture built on fewer, albeit very powerful, CPU cores is not advantageous for the typical workload, which consists of many less demanding applications running concurrently.

Thus, migrating a more demanding legacy application to standard cloud compute plans can result in, at the very least, an application slowdown, with the resulting economic losses and dissatisfaction among users and the organisation's management.

How to migrate legacy and compute-intensive applications to the cloud

To avoid these scenarios, the key (as always) is thorough preparation of the entire migration. Once we have identified legacy and other problematic applications, we can either prepare them for cloud operation or prepare the cloud for them:

- modify the application architecture (or transfer the necessary functionality and data to another application),

- choose one of the special computing plans (or use dedicated hardware).

Modifying the application architecture

The architecture of the application can be influenced at the configuration level or in the code itself.

Modifying an application at the configuration level

We often have to make do with this method, especially for "ready-made" applications. More modern multi-tier applications, which are usually designed for performance scaling, have an advantage here.

We replaced a "super-performance" on-premise server that processed the requests of an ERP system's web application with two servers in the cloud behind a load balancer, and we reserved a third server for requests from outside the network. The side benefits are increased redundancy of the solution, scalability for the future and protection of the organization's internal operations in case of a cyber attack.
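The routing idea behind this setup can be sketched in a few lines of Python. This is only an illustration, not the configuration we actually used: the server names and the `Router` class are hypothetical, and a real deployment would rely on the cloud provider's load balancer rather than application code.

```python
from itertools import cycle

# Hypothetical server names; the real hostnames are not part of this article.
INTERNAL_POOL = ["erp-web-1", "erp-web-2"]
EXTERNAL_SERVER = "erp-web-ext"


class Router:
    """Round-robin over the internal pool; external traffic gets its own server."""

    def __init__(self, internal_pool: list[str], external_server: str) -> None:
        self._internal = cycle(internal_pool)
        self._external = external_server

    def pick_backend(self, from_internal_network: bool) -> str:
        # Internal requests alternate between the two load-balanced servers.
        if from_internal_network:
            return next(self._internal)
        # Requests from outside the network never touch the internal pool.
        return self._external


router = Router(INTERNAL_POOL, EXTERNAL_SERVER)
picks = [router.pick_backend(True) for _ in range(3)]
```

Keeping external requests on a separate server is what isolates internal operations: an overload or attack from outside does not consume capacity in the internal pool.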

Changes in the application code itself

This way of influencing the application architecture is usually more complicated than reconfiguration, if it is possible at all. We are usually not allowed to modify "finished" applications, but we can work with the software vendor on a solution.

For a DMS solution aimed at the public sector, switching to the Linux version helped, as it made better use of parallel task processing on modern processors. An added benefit was access via mobile devices, which had not been possible until then.

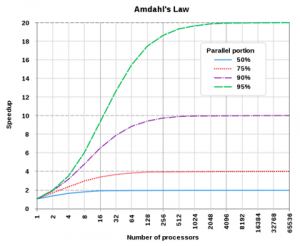

In "custom" line-of-business applications, the possibilities are wider: we have the code available and can modify it. A common approach is to parallelize the program code. This is a challenging discipline, where complicated errors can occur because managing concurrent application threads is difficult.
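As a minimal sketch of what such parallelization can look like, assume the workload splits into independent tasks that share no state. The function `score_record` is a hypothetical stand-in for a CPU-bound step of the application; the example uses Python's standard `concurrent.futures` module and worker processes, so each task runs on its own CPU core.

```python
from concurrent.futures import ProcessPoolExecutor


def score_record(n: int) -> int:
    """Hypothetical CPU-bound step: sum of squares of the integers below n."""
    return sum(i * i for i in range(n))


def run_sequential(inputs: list[int]) -> list[int]:
    # The legacy shape: one task after another on a single core.
    return [score_record(n) for n in inputs]


def run_parallel(inputs: list[int], workers: int = 4) -> list[int]:
    # Tasks are independent, so no locking or shared state is needed.
    # pool.map returns results in the same order as the inputs.
    with ProcessPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(score_record, inputs))


if __name__ == "__main__":
    inputs = [200_000, 300_000, 400_000, 500_000]
    # Both variants must produce identical results.
    assert run_parallel(inputs) == run_sequential(inputs)
```

The easy case shown here avoids the concurrency errors mentioned above precisely because the tasks do not share data. Once tasks have to update common state, the code needs synchronization, and that is where most of the difficulty lies.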

The third way

But it is not always necessary to resort to costly development. In addition to their own logic, applications usually rely on a variety of ready-made libraries, whose newer versions often contain parallelized code and which we can deploy after proper testing.

It's a good idea to monitor other computing resources as well, so that the bottleneck doesn't become, for example, memory bandwidth or the disk subsystem, whose performance can be influenced in the cloud by choosing the right tier.

An "extreme" application modification is conversion to code that can be executed efficiently on GPUs, i.e. the processors of graphics cards, instead of CPUs. This is a rather niche solution. However, large cloud providers offer compute plans that include the rental of AMD or NVIDIA GPU capacity. This approach is suitable for massively parallel operations, machine learning and AI.

The most radical option is to retire the "problematic" (usually legacy) application altogether. It can be replaced, for example, by the functionality of another application that the organisation already has but has not used so far, which also consolidates the organisation's information system. The cloud provider's marketplace can be another good source of inspiration.

We have repeatedly succeeded in moving various HR agendas, equipment loan records or the management of simpler projects to M365 applications. This has eliminated a number of "small systems" with problematic database access (the popular loading of records one by one) and let us work with a single list of users and groups in Azure AD. It has also usually simplified access from different device types.

Special compute plans for legacy and demanding applications

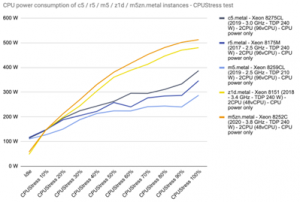

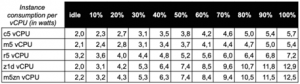

Cloud providers are reluctant to deploy high-performance hardware in their datacenters, or they charge well for it (for the reasons mentioned above).

Even if you can't optimize a (typically legacy) application, there are still ways to run it successfully in the cloud: special compute plans aimed at applications requiring high processor performance, above-standard memory, etc.

Azure

In the Azure environment, the relevant virtual machine sizes in terms of processor performance are:

- Fsv2-series - with Intel Xeon processors clocked at 3.4 to 3.7 GHz,

- FX-series - with Intel Xeon processors clocked at 4 GHz.

These two and many other Azure plans have interesting features that can be explored for example in this document.

AWS

In the AWS environment, the relevant EC2 instance types in terms of processor performance are:

- C7g, C6g, C6gn - with AWS Graviton ARM processors,

- C6i, C6a, Hpc6a - with third-generation Intel Xeon Scalable and AMD EPYC processors clocked at 3.5 to 3.6 GHz,

- C5, C5a, C5n - with older-generation Intel Xeon and AMD EPYC processors with an average clock speed of 3.5 GHz,

- C4 - with an Intel Xeon E5-2666 clocked at 2.9 GHz.

You can get acquainted with the details of the wide range of AWS plans, for example, here.

However, after calculating the longer-term costs of running applications on compute-intensive plans, we may conclude that the costs will increase disproportionately compared to running on-premise.

The global datacenter networks of large cloud providers are divided into regions (see the previous article in the Cloud Encyclopedia) and not all plans are available everywhere. This can complicate the connection to other parts of the organization's information system, or it may not be possible to restart the application after downtime (due to a lack of these "scarcer" resources in the cloud).

It is then appropriate to consider one of the options for securing dedicated hardware, which the provider can tailor to our needs. This way, we use the high-quality infrastructure of a large datacenter and have an optimal computing solution dedicated to our tasks.

Redundancy can be addressed by falling back on shared cloud resources, provided the reduced performance is acceptable during a dedicated hardware outage.

Summary: Even legacy and computationally intensive applications can be migrated

Computationally intensive plans are among the more expensive ones in the cloud. Calculate the cost of deploying them in advance, either with the help of cloud calculators or, preferably, with the advice of an experienced migration partner.

The expected operating cost tells you how much money it makes sense to spend on optimising or changing your application so that it runs more cost-effectively, or whether you should try a dedicated solution, especially if you regularly run a high volume of demanding calculations.
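The reasoning can be turned into simple arithmetic. The sketch below is only illustrative: the hourly rates are made-up placeholders, not real provider prices, and the function names and the 730-hour month are assumptions chosen for the example. It estimates the extra spend on a compute-optimised plan over a planning horizon, which is roughly the upper bound on what an optimisation effort is worth if it lets the application run on a standard plan.

```python
def monthly_cost(hourly_rate: float, hours_per_month: float = 730.0) -> float:
    """Cost of running one instance continuously for a month."""
    return hourly_rate * hours_per_month


def optimisation_budget(
    standard_rate: float, compute_rate: float, horizon_months: int
) -> float:
    """Extra spend on the compute-optimised plan over the whole horizon."""
    extra_per_month = monthly_cost(compute_rate) - monthly_cost(standard_rate)
    # If the special plan is not more expensive, there is nothing to save.
    return max(extra_per_month, 0.0) * horizon_months


# Placeholder rates per hour, NOT real prices: 0.10 standard, 0.25 compute-optimised.
budget = optimisation_budget(standard_rate=0.10, compute_rate=0.25, horizon_months=36)
```

With these placeholder numbers the extra spend over three years comes to about 3,942 currency units per instance. The same comparison can be made against the quoted price of a dedicated solution.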

When choosing the final solution, as with all IT projects, we should not forget to look at security, availability and sustainability.