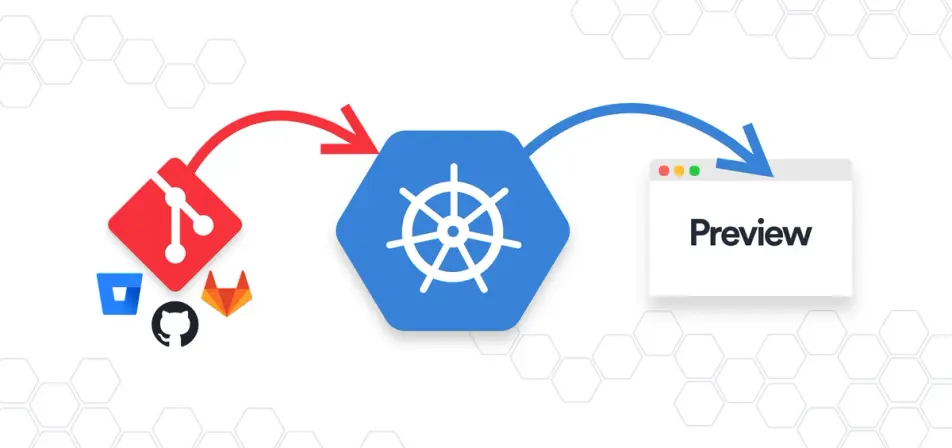

Cloud-native approach to application deployment in Kubernetes

"Application deployment in Kubernetes is often the bogeyman. Which approach to choose? What works best for developers and provides enough control? Let's explore the options for cloud-native approaches to application deployment in Kubernetes under public cloud providers."

Adam Felner

(Source: https://www.okteto.com/blog/preview-environments-for-kubernetes/)

What is Kubernetes?

Kubernetes is an open-source platform for container orchestration that automates the deployment, scaling and management of containerized applications. Over the past 10 years, Kubernetes has become the clear choice for running containerized applications, because it offers a robust and flexible platform for building easily scalable systems.

Kubernetes is available from the mainstream public cloud providers as a managed service (PaaS), but you can also run it yourself on VMs in IaaS (similar to on-premise environments). However, we recommend using IaaS only in very specific scenarios. We took a closer look at Kubernetes in a previous article.

Cloud adoption has also contributed to this. Cloud-native practices adhere to the core principles of cloud computing (scalability, resilience and microservice architecture), which favors using Kubernetes to get applications up and running quickly and efficiently.

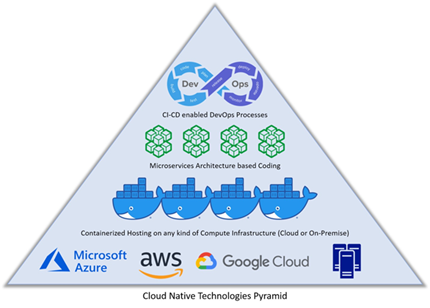

The principle of cloud-native computing with containers

(Source: https://www.ridge.co/blog/cloud-native-applications-explained/)

Principles of cloud-native computing

Cloud-native computing is a set of practices designed to take full advantage of cloud computing models. These practices include:

- Microservices architecture: Split applications into small, independent services that can be deployed and scaled individually.

- Continuous delivery: Automation of the release process to enable frequent and reliable application updates.

- Resilience: Designing applications to withstand failures and recover quickly.

- Scalability: Ensuring that applications can scale up or down based on demand.

These principles are introduced more fully in the articles "Why create cloud native applications? In the cloud, nothing else even makes sense", "Containers in the clouds - how to use them in the cloud?" and "Deployment pipeline: let's go for it in the cloud!"

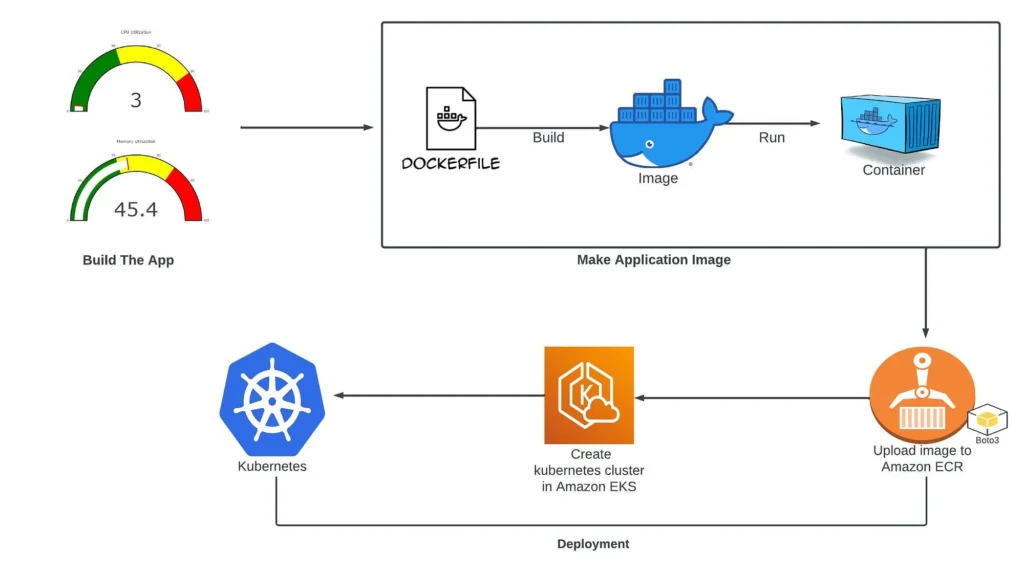

Sample use of containers in the cloud

(Source: https://aws.plainenglish.io/deploy-cloud-native-monitoring-app-on-kubernetes-42ca974cd47c)

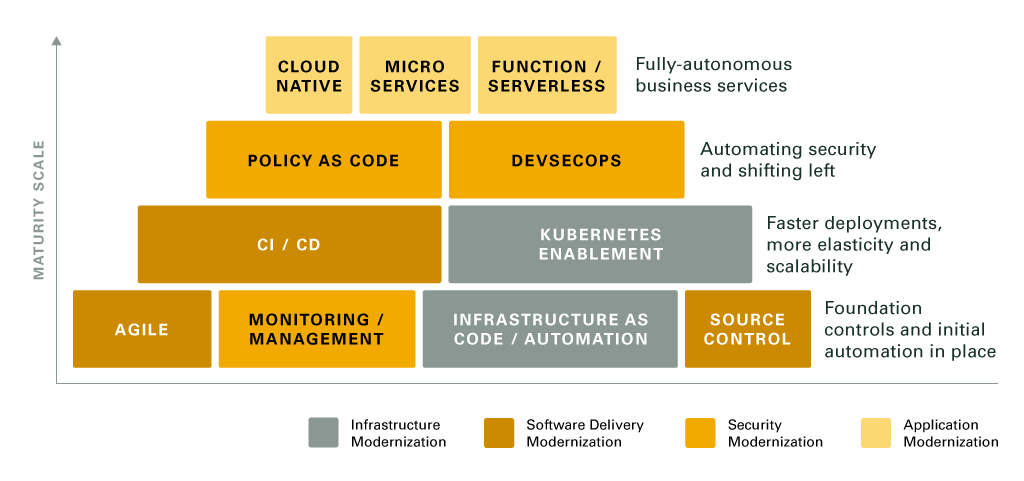

Cloud-native maturity levels

(Source: https://www.burwood.com/devops-adoption)

Deployment in Kubernetes: what are our options?

Application deployment in Kubernetes is one of the key functionalities of this platform, which enables efficient management of applications in a containerized environment. Kubernetes offers multiple ways to deploy and keep applications running, ensuring high availability, fault tolerance, and the ability to scale dynamically.

1. Manual deployment in Kubernetes using kubectl

The kubectl command-line tool interacts with the Kubernetes API server. It allows users to deploy applications by directly applying configuration files, making it a straightforward option for resource management in Kubernetes.

Benefits:

- Simplicity and direct control over Kubernetes resources

- Flexibility in manual configuration of resources

Disadvantages:

- It can become complex and error-prone with larger deployments.

- There are no built-in mechanisms for versioning or templating.

Best practices:

- Use version control systems to manage your YAML configuration files.

- Validate your configuration files before deployment with tools like Kubeval or Kustomize.

- Automate repetitive tasks using scripts or CI/CD pipelines.
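The practices above can be sketched in a few lines of Python. This is a minimal illustration, not a complete tool: the application name `web`, the image `example.com/web:1.0.0`, the port and the helper names are all assumptions made for the example. It builds a Deployment manifest as a plain dictionary, runs a small sanity check, and prints JSON, which kubectl accepts alongside YAML.

```python
import json


def build_deployment(name: str, image: str, replicas: int = 2) -> dict:
    """Return a minimal apps/v1 Deployment manifest as a plain dict."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        # Port 8080 is an assumption for this example.
                        {"name": name, "image": image,
                         "ports": [{"containerPort": 8080}]}
                    ]
                },
            },
        },
    }


def validate(manifest: dict) -> list:
    """Return a list of problems found by a few basic structural checks."""
    errors = [f"missing field: {field}"
              for field in ("apiVersion", "kind", "metadata", "spec")
              if field not in manifest]
    spec = manifest.get("spec", {})
    selector = spec.get("selector", {}).get("matchLabels", {})
    pod_labels = spec.get("template", {}).get("metadata", {}).get("labels", {})
    # A Deployment's selector must match the labels of its pod template.
    if any(pod_labels.get(key) != value for key, value in selector.items()):
        errors.append("selector does not match pod template labels")
    return errors


if __name__ == "__main__":
    manifest = build_deployment("web", "example.com/web:1.0.0")
    problems = validate(manifest)
    if problems:
        raise SystemExit("\n".join(problems))
    print(json.dumps(manifest, indent=2))
```

If the output is saved as deployment.json and committed to version control, it can be applied with `kubectl apply -f deployment.json`. The checks here are deliberately shallow; a dedicated validator covers far more of the schema.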

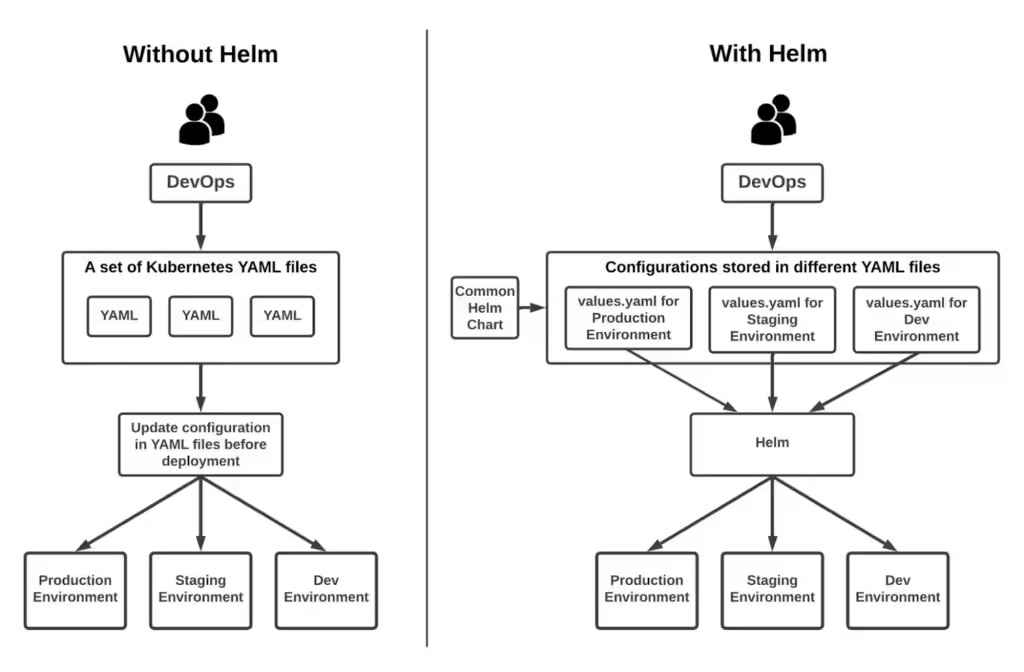

2. Deployment in Kubernetes using Helm Charts

Helm is a package manager for Kubernetes that simplifies application deployment by packaging resources into charts. A Helm chart is a collection of files that describe a related set of Kubernetes resources.

A Helm chart typically includes:

- Chart.yaml: chart metadata,

- values.yaml: default configuration values,

- templates/: templated Kubernetes manifests that are rendered using the values from values.yaml.

Benefits:

- Simplifies deployment and management of complex applications.

- Supports templating and dynamic resource configuration.

Disadvantages:

- It can add unnecessary complexity to small or simple deployments.

- Requires knowledge of Helm-specific templating and syntax.

Best practices:

- Use Helm to package and distribute your apps for easy deployment and management throughout the app lifecycle.

- Take advantage of Helm's templating features to customize deployments based on environment-specific requirements.

- Follow best practices for versioning and release management to maintain consistency and reliability.
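To make the role of values.yaml more concrete, here is a rough Python sketch of how default values and environment-specific overrides combine before templates are rendered. It is not Helm's implementation: Helm uses Go templates and has extra rules (for example, a null override removes a key) that this sketch ignores. The value names and image are hypothetical.

```python
import copy


def merge_values(defaults: dict, overrides: dict) -> dict:
    """Recursively merge overrides into a copy of defaults; overrides win.

    Nested dicts are merged key by key; any other value (including a list)
    replaces the default outright.
    """
    merged = copy.deepcopy(defaults)
    for key, value in overrides.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = merge_values(merged[key], value)
        else:
            merged[key] = copy.deepcopy(value)
    return merged


# Hypothetical chart defaults, as they might appear in values.yaml.
DEFAULTS = {
    "replicaCount": 1,
    "image": {"repository": "example.com/web", "tag": "1.0.0"},
}

# Hypothetical production overrides, as might be passed in a second values file.
PRODUCTION = {"replicaCount": 3, "image": {"tag": "1.2.0"}}

if __name__ == "__main__":
    print(merge_values(DEFAULTS, PRODUCTION))
```

The point of the design is that an environment only states what differs from the defaults, so the production file stays short and the chart remains the single place where the full configuration is defined.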

Deployment using Helm Charts

(Source: https://circleci.com/blog/what-is-helm/)

3. Kustomize for configuration management

Kustomize is a configuration management tool that allows users to customize Kubernetes manifests without modifying the original files. It uses the concept of overlays and patches to apply changes dynamically.

Kustomize features:

- Overlays: define the changes to be applied to the base configurations.

- Patches: specify modifications to existing manifests using strategic merge patches or JSON patches (RFC 6902), written in YAML or JSON.

Benefits:

- Allows easy customization of resources without duplicating configurations.

- Integrates well with kubectl (it is built in via kubectl apply -k), eliminating the need for additional tools.

Disadvantages:

- Requires an understanding of Kustomize's syntax and patching mechanisms.

- It may not be as feature-rich as Helm for complex deployments.

Best practices:

- Use Kustomize to manage environment-specific configurations and overlays.

- Organize your configurations into bases and overlays to facilitate reuse and maintainability.

- Validate your customizations with kustomize build before applying them to the cluster.
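The base-plus-patch idea can be illustrated with a small Python sketch. It applies a handful of JSON Patch (RFC 6902) operations to a copy of a base manifest, leaving the base untouched. Only the `add` and `replace` operations are handled, and the manifest, image names and label are assumptions for the example; Kustomize itself supports more operations and also strategic merge patches.

```python
import copy


def apply_json_patch(document: dict, operations: list) -> dict:
    """Apply 'add' and 'replace' JSON Patch operations to a copy of document."""
    result = copy.deepcopy(document)
    for op in operations:
        kind, value = op["op"], op["value"]
        if kind not in ("add", "replace"):
            raise ValueError(f"unsupported operation: {kind}")
        # JSON Pointer: "/spec/replicas" -> ["spec", "replicas"]
        tokens = [t.replace("~1", "/").replace("~0", "~")
                  for t in op["path"].split("/")[1:]]
        parent = result
        for token in tokens[:-1]:
            parent = parent[int(token)] if isinstance(parent, list) else parent[token]
        last = tokens[-1]
        if isinstance(parent, list):
            if kind == "add":
                # "-" means "append to the end of the list".
                index = len(parent) if last == "-" else int(last)
                parent.insert(index, value)
            else:
                parent[int(last)] = value
        else:
            if kind == "replace" and last not in parent:
                raise KeyError(f"cannot replace missing key: {last}")
            parent[last] = value
    return result


# Hypothetical base manifest shared by all environments.
BASE = {
    "kind": "Deployment",
    "metadata": {"name": "web"},
    "spec": {
        "replicas": 1,
        "template": {"spec": {"containers": [
            {"name": "web", "image": "example.com/web:1.0.0"}
        ]}},
    },
}

# Hypothetical production overlay expressed as patch operations.
PROD_PATCH = [
    {"op": "replace", "path": "/spec/replicas", "value": 3},
    {"op": "replace", "path": "/spec/template/spec/containers/0/image",
     "value": "example.com/web:1.2.0"},
    {"op": "add", "path": "/metadata/labels", "value": {"env": "production"}},
]

if __name__ == "__main__":
    print(apply_json_patch(BASE, PROD_PATCH))
```

Because the base is never modified, the same base can be combined with a different patch list for each environment, which is the property the overlay structure is meant to provide.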

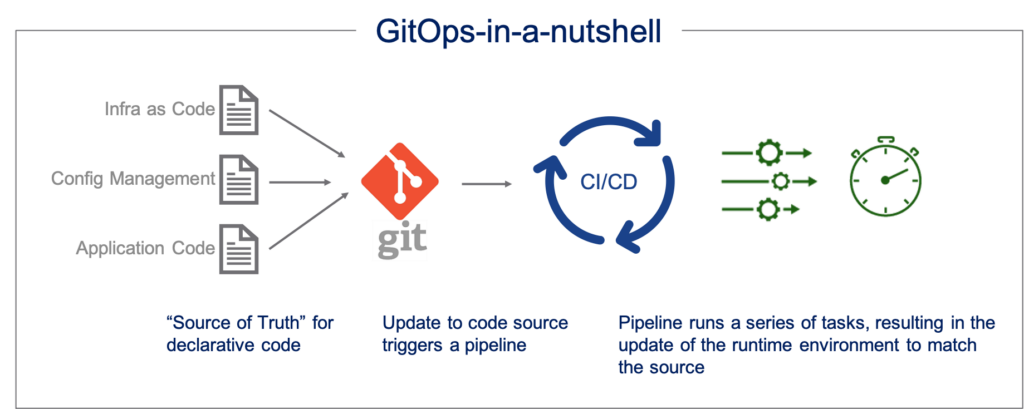

4. GitOps approach with Argo CD and Flux

GitOps is a methodology that uses Git as the single source of truth for declarative infrastructure and application deployment. Changes to the infrastructure are made via pull requests and are automatically applied to the cluster by a GitOps tool. We cover this topic in detail in the article Cloud Management 3: Move to GitOps - a modern approach to managing and operating applications and infrastructure.

GitOps workflow

(Source: https://www.spiceworks.com/tech/devops/articles/what-is-gitops/)

Overview of Argo CD and Flux:

- Argo CD: A declarative GitOps continuous delivery tool for Kubernetes that monitors Git repositories for changes and syncs them with the cluster.

- Flux: A set of continuous and progressive delivery solutions for Kubernetes that automate resource deployment based on Git commits.

Benefits:

- Provides a clear audit trail of changes via Git history.

- Enables collaboration and peer review of infrastructure changes.

Disadvantages:

- Requires integration with CI/CD pipeline and Git repositories.

- It can add complexity to managing GitOps configurations and repositories.

Best practices:

- Organize your Kubernetes manifests and configurations in Git repositories, following a clear structure.

- Use Git branches and pull requests to manage changes and enable peer review.

- Integrate GitOps tools like Argo CD or Flux with your CI/CD pipeline for automated deployments.
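At the heart of any GitOps tool is a comparison between the state declared in Git and the state running in the cluster. The following Python sketch shows that comparison in its simplest form. It is a conceptual illustration only, not how Argo CD or Flux are implemented; the resource keys and the `prune` parameter are names chosen for this example.

```python
def plan_sync(desired: dict, live: dict, prune: bool = False) -> list:
    """Compare desired (Git) and live (cluster) state and list sync actions.

    Both arguments map a resource key such as "Deployment/web" to its manifest.
    Resources that exist only in the cluster are deleted only when prune=True.
    """
    actions = []
    for name in sorted(desired):
        if name not in live:
            actions.append(("create", name))
        elif live[name] != desired[name]:
            actions.append(("update", name))
    if prune:
        for name in sorted(set(live) - set(desired)):
            actions.append(("delete", name))
    return actions


if __name__ == "__main__":
    git_state = {
        "Deployment/web": {"replicas": 3},
        "Service/web": {"port": 80},
    }
    cluster_state = {
        "Deployment/web": {"replicas": 1},   # drifted from Git
        "ConfigMap/old": {"key": "value"},   # no longer declared in Git
    }
    print(plan_sync(git_state, cluster_state, prune=True))
```

Running the comparison repeatedly is what corrects drift: any manual change in the cluster shows up as an "update" on the next pass and is reverted to what Git declares.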

5. Serverless deployments with Knative

Knative is a Kubernetes-based platform that extends Kubernetes capabilities to support serverless workloads. It provides the building blocks for deploying and managing serverless applications:

- Knative Serving: Manages the deployment and scaling of serverless applications.

- Knative Eventing: Provides a framework for consuming and producing events.

Knative workflow diagram showcase

(Source: https://knative.dev/docs/community/)

Benefits:

- Simplifies deployment and scaling of serverless applications.

- Reduces infrastructure management overhead by abstracting serverless workloads.

Disadvantages:

- Adds complexity for non-serverless applications.

- Requires an understanding of Knative components and configurations.

Best practices:

- Use Knative to deploy microservices and event-driven applications that benefit from serverless architectures.

- Take advantage of Knative's autoscaling and event-driven functionality to optimize resource utilization.

- Monitor and manage Knative components to ensure optimal performance and reliability.
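The autoscaling behavior mentioned above can be approximated with a short calculation. This Python sketch is a simplification, assuming a concurrency-based target per pod (the default of 100 and the bounds used here are assumptions for the example); the real Knative autoscaler also averages metrics over time windows and has additional modes that are not modeled.

```python
import math


def desired_replicas(concurrent_requests: float,
                     target_per_pod: float = 100,
                     min_scale: int = 0,
                     max_scale: int = 10) -> int:
    """Estimate how many pods are needed for the observed concurrency.

    With min_scale=0 the result can be zero, which is the "scale to zero"
    behavior typical of serverless platforms.
    """
    if target_per_pod <= 0:
        raise ValueError("target_per_pod must be positive")
    wanted = math.ceil(concurrent_requests / target_per_pod)
    # Clamp the result to the configured bounds.
    return max(min_scale, min(wanted, max_scale))


if __name__ == "__main__":
    for load in (0, 50, 250, 5000):
        print(load, "concurrent requests ->", desired_replicas(load), "pods")
```

Setting `min_scale` to 1 trades a small idle cost for avoiding cold starts, which is often the first tuning decision for latency-sensitive services.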

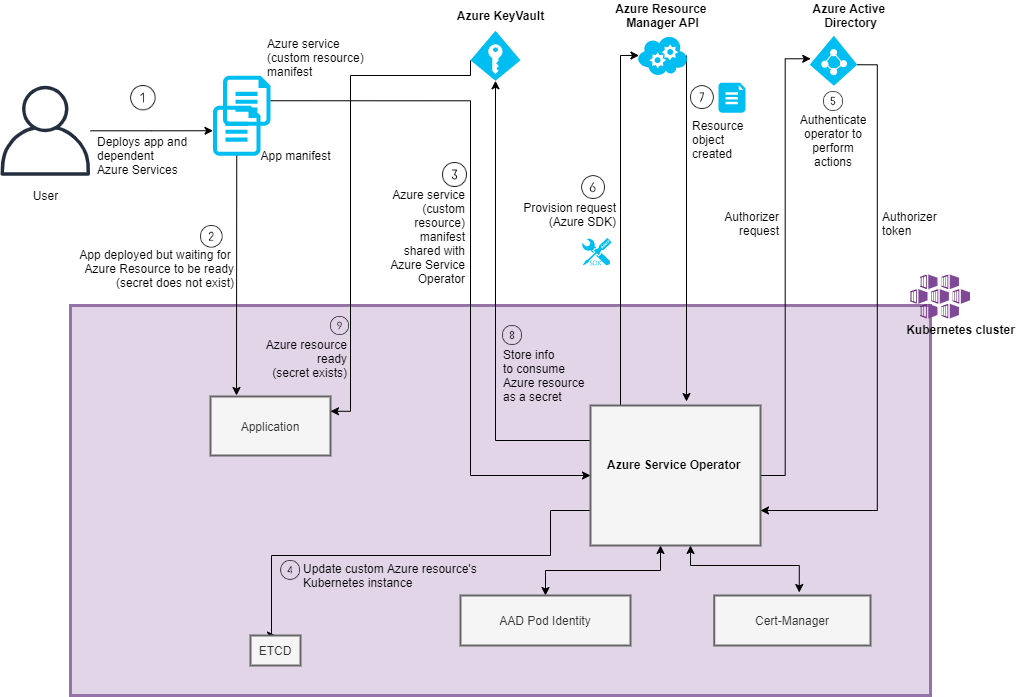

6. Deployment in Kubernetes based on operators

Kubernetes operators are custom controllers that extend the capabilities of Kubernetes to manage complex applications. Operators automate the deployment, scaling and management of application-specific resources. Operators are often developed using the Operator SDK, which provides tools and libraries for building custom controllers.

Operator workflow in Azure

(Source: https://devblogs.microsoft.com/ise/azure-service-operators-a-kubernetes-native-way-of-deploying-azure-resources/)

Benefits:

- Automates complex application lifecycle tasks, reducing the need for manual intervention.

- Extends Kubernetes capabilities to manage application-specific resources.

Disadvantages:

- Requires the development and maintenance of custom controllers.

- Adds complexity to the Kubernetes cluster.

Best practices:

- Use operators to automate the management of complex stateful applications and databases.

- Follow best practices for operator development and maintenance, including testing and versioning.

- Monitor and manage operator deployments to ensure reliability and performance.
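An operator's controller repeatedly compares the desired state from a custom resource with what it observes, and takes one corrective step at a time. The Python sketch below models that loop for a hypothetical database resource. The `DatabaseState` type, the action names and the rule that new instances start with one replica are all assumptions for illustration; a real operator would call the Kubernetes API instead of updating an in-memory object.

```python
from dataclasses import dataclass, replace
from typing import List, Optional


@dataclass(frozen=True)
class DatabaseState:
    """Replica count and version of a hypothetical managed database."""
    replicas: int
    version: str


def reconcile(desired: DatabaseState, observed: Optional[DatabaseState]) -> str:
    """Decide the single next action that moves observed toward desired."""
    if observed is None:
        return "create"
    if observed.version != desired.version:
        return "upgrade"
    if observed.replicas != desired.replicas:
        return "scale"
    return "none"


def apply_action(action: str, desired: DatabaseState,
                 observed: Optional[DatabaseState]) -> Optional[DatabaseState]:
    """Simulate the effect of an action on the observed state."""
    if action == "create":
        # Assumption: new instances start with one replica at the desired version.
        return DatabaseState(replicas=1, version=desired.version)
    if action == "upgrade":
        return replace(observed, version=desired.version)
    if action == "scale":
        return replace(observed, replicas=desired.replicas)
    return observed


def converge(desired: DatabaseState,
             observed: Optional[DatabaseState] = None,
             max_steps: int = 10) -> List[str]:
    """Run reconcile steps until nothing is left to do; return the actions taken."""
    history = []
    for _ in range(max_steps):
        action = reconcile(desired, observed)
        if action == "none":
            break
        history.append(action)
        observed = apply_action(action, desired, observed)
    return history


if __name__ == "__main__":
    print(converge(DatabaseState(replicas=3, version="15")))
```

Ordering the checks (create, then upgrade, then scale) encodes operational knowledge in code, which is the main reason operators suit stateful applications where the sequence of steps matters.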

Choosing the right deployment option

When choosing a deployment option for your Kubernetes application, consider the following factors:

- application complexity - how complex your application and its dependencies are

- team expertise - the experience and skills of your team in managing Kubernetes deployments

- operational requirements - the operational needs of your application, including scalability, resilience and monitoring

Comparison table

| Deployment option | Benefits | Disadvantages | Use cases |
|---|---|---|---|
| Manual deployment | Simplicity, direct control | Complexity for large deployments, lack of versioning | Small, simple deployments |
| Helm Charts | Simplifies complex deployments, supports templating | Adds complexity, requires knowledge of Helm | Complex, dynamic environments |
| Kustomize | Easy customization, no duplication of configurations | Requires an understanding of patch syntax | Environment-specific adaptations |
| GitOps (Argo CD, Flux) | Clear audit trail, collaboration via Git | Complexity of integration, requires knowledge of GitOps | Automated, collaborative deployments |
| Knative | Simplifies serverless deployments, reduces overhead | Complexity for non-serverless applications, requires knowledge of Knative | Serverless applications, event-driven applications |
| Operator-based deployments | Automates complex tasks, extends Kubernetes capabilities | Development and maintenance of custom controllers | Complex stateful applications, databases |

Integrating cloud-native practices in Kubernetes deployments

Automation and CI/CD pipeline

Automating your deployment processes is key to achieving consistency and reliability in your Kubernetes deployments. Integrate a CI/CD pipeline with your Kubernetes cluster to automate the build, test and deployment phases of your application lifecycle.

(Source: https://www.seaflux.tech/blogs/ultimate-guide-kubernetes-deployment)
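As a rough sketch of what such a pipeline automates, the Python below assembles the build, test and deploy commands for one commit and runs them in order, stopping at the first failure. The registry, application name, test command and the assumption that the container is named after the application are all hypothetical; a real pipeline would normally express these steps in the CI system's own configuration format.

```python
import subprocess


def deployment_steps(registry: str, app: str, commit_sha: str,
                     namespace: str = "default",
                     test_command=("pytest",)) -> list:
    """Return the ordered commands for building, testing and deploying one commit."""
    # Tagging the image with the commit keeps every deployment traceable to Git.
    image = f"{registry}/{app}:{commit_sha[:7]}"
    return [
        ["docker", "build", "-t", image, "."],
        ["docker", "run", "--rm", image, *test_command],
        ["docker", "push", image],
        # Assumption: the container inside the Deployment is named after the app.
        ["kubectl", "set", "image", f"deployment/{app}", f"{app}={image}",
         "-n", namespace],
        ["kubectl", "rollout", "status", f"deployment/{app}", "-n", namespace],
    ]


def run_pipeline(steps: list, runner=subprocess.run) -> None:
    """Run each step in order; check=True aborts the pipeline on the first failure."""
    for step in steps:
        runner(step, check=True)


if __name__ == "__main__":
    for command in deployment_steps("registry.example.com", "web",
                                    "0123456789abcdef"):
        print(" ".join(command))
```

The final `kubectl rollout status` step makes the pipeline wait for the rollout to finish, so a failed deployment fails the pipeline instead of going unnoticed.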

Tracing and monitoring

Implementing tracing and monitoring in your Kubernetes environment is essential to maintaining the health and performance of your applications. Use tools like Prometheus, Grafana and Elasticsearch to monitor key metrics and gain insight into your application's behavior.
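To give a feel for what "key metrics" look like in practice, the following Python sketch renders a counter in the plain-text format that Prometheus scrapes. The metric name, labels and values are made up for the example, label values are not escaped, and a real application would normally use an official client library instead of formatting the text by hand.

```python
def render_counter(name: str, help_text: str, samples: dict) -> str:
    """Render one counter metric in the Prometheus text exposition format.

    samples maps a tuple of (label, value) pairs to the counter value.
    """
    lines = [f"# HELP {name} {help_text}", f"# TYPE {name} counter"]
    for labels, value in samples.items():
        if labels:
            label_text = ",".join(f'{key}="{val}"' for key, val in labels)
            lines.append(f"{name}{{{label_text}}} {value}")
        else:
            lines.append(f"{name} {value}")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(render_counter(
        "http_requests_total",
        "Total number of HTTP requests.",
        {
            (("method", "GET"), ("code", "200")): 1027,
            (("method", "POST"), ("code", "500")): 3,
        },
    ), end="")
```

Labels such as `method` and `code` are what make the metric useful in dashboards, because they let you break one counter down by request type and outcome.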

Security and compliance

Security and compliance are critical aspects of any Kubernetes deployment. Follow best practices for securing your cluster, including:

- implementation of network policies and access control,

- use of key vaults, password managers and certificate stores for sensitive data,

- regular updates and patching of your Kubernetes components.
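The first item, network policies, lends itself to a short example. This Python sketch generates two NetworkPolicy manifests: one that denies all incoming traffic in a namespace, and one that then allows a single client application through. The namespace, application labels, policy names and port are assumptions for illustration, and policies only take effect when the cluster's network plugin enforces them.

```python
import json


def default_deny_ingress(namespace: str) -> dict:
    """NetworkPolicy that selects every pod and allows no incoming traffic."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "default-deny-ingress", "namespace": namespace},
        # An empty podSelector matches all pods in the namespace.
        "spec": {"podSelector": {}, "policyTypes": ["Ingress"]},
    }


def allow_ingress_from(namespace: str, app: str, client_app: str, port: int) -> dict:
    """NetworkPolicy that lets pods labeled client_app reach pods labeled app."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": f"allow-{client_app}-to-{app}", "namespace": namespace},
        "spec": {
            "podSelector": {"matchLabels": {"app": app}},
            "policyTypes": ["Ingress"],
            "ingress": [{
                "from": [{"podSelector": {"matchLabels": {"app": client_app}}}],
                "ports": [{"protocol": "TCP", "port": port}],
            }],
        },
    }


if __name__ == "__main__":
    policies = [
        default_deny_ingress("shop"),
        allow_ingress_from("shop", app="api", client_app="web", port=8080),
    ]
    for policy in policies:
        print(json.dumps(policy, indent=2))
```

Starting from deny-all and adding narrow allow rules means a newly deployed service is unreachable until someone explicitly opens it, which is usually the safer default.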

Deployment in Kubernetes in a nutshell

On any cloud provider, Kubernetes offers a wide range of deployment options, each with its own set of benefits and trade-offs. By understanding the options available and following cloud-native best practices, you can optimize your application deployments and achieve greater efficiency, scalability and reliability.

For further learning and exploring the possibilities of Kubernetes and cloud-native deployment, we recommend the following resources:

- Kubernetes documentation: Kubernetes.io

- Helm documentation: Helm.sh

- Kustomize documentation: Kustomize.io

- Argo CD documentation: ArgoCD.io

- Flux documentation: FluxCD.io

- Knative documentation: Knative.dev

- Kubernetes Operator SDK: Operator Framework