Network architecture, or untangling cloud networks

Enterprise network architecture in the cloud can be complex. How can you avoid getting entangled in cloud networks?

Jakub Procházka

The network architecture in the cloud can vary in complexity, depending on the services and needs of the company (operational, security, legislative and others). The important thing is not to get too entangled in these networks, and I hope this article will help you avoid that.

Virtual networks

The elementary building block of cloud networking is the virtual network. In Azure it is called a VNet, in AWS a VPC (virtual private cloud). As the name suggests, this cornerstone of network infrastructure is the virtual equivalent of a traditional network connecting the systems attached to it (similar to what we know from on-premise environments).

In some respects, a virtual network differs slightly from an on-premise network, but overall its creation and management are greatly simplified. There is no longer any need to run to the datacenter to connect cabling or to go through complicated configuration of switches and routers. We can do everything directly from the portal or the CLI (command line), practically instantly.

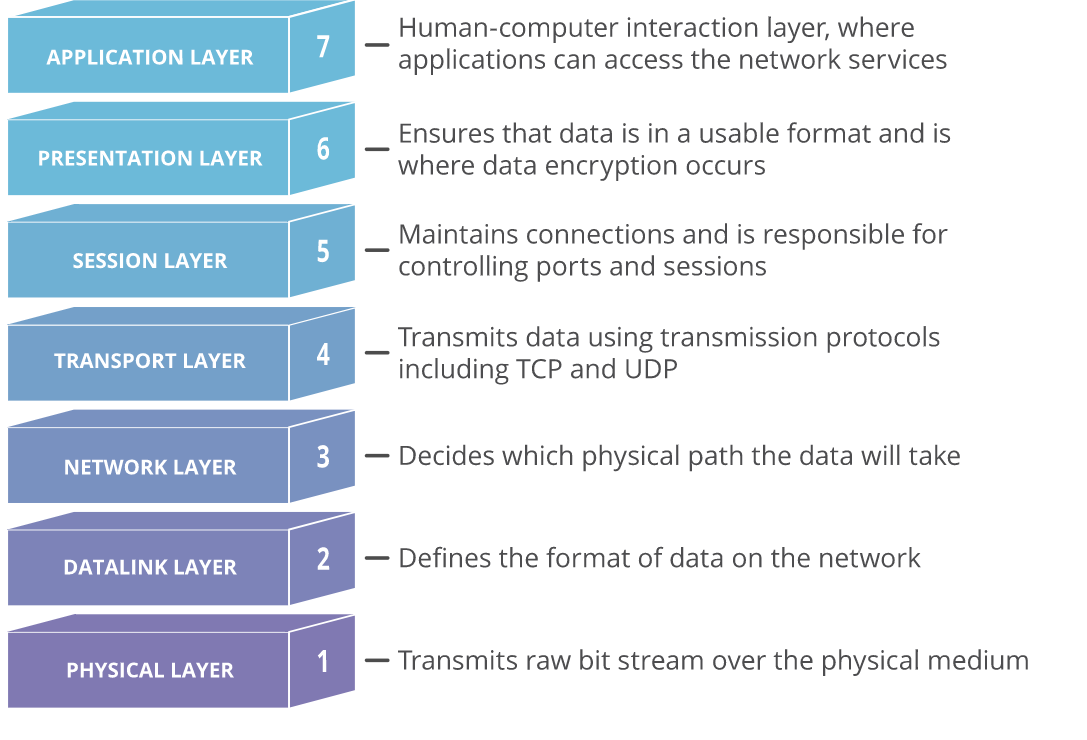

In the case of virtual networks, we operate at layer 3 (L3) of the ISO/OSI model. This means that we cannot, for example, use VLANs, which belong to layer 2 (L2). In the cloud we work from L3 upwards (the IP protocol is a must).

Throughout this article, I will be describing technologies working at different layers, so it is useful to recall the OSI model in the figure below.

IP addresses in virtual networks

A virtual network forms an isolated, secure, highly available private network inside the cloud. Like any internal network, it must be assigned a private address range, specifically an IPv4 CIDR block.

Keep in mind that we always lose five IP addresses in every subnet, compared to the two (three including the gateway) we are used to from on-premise. In addition to the traditional network address, broadcast address and gateway, AWS and Azure reserve two more addresses, for DNS and for "future use". In Azure, the smallest supported subnet has the prefix /29 (leaving three usable IPs), while a /8 block, for example, leaves 16,777,211 usable IPs. AWS is stricter: VPC and subnet IPv4 blocks must be between /16 and /28 (a /28 leaves 11 usable IPs).
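To make the arithmetic concrete, here is a minimal Python sketch using the standard `ipaddress` module. It assumes the five reserved addresses are the network address, the next three addresses and the last (broadcast) address of each subnet, which matches the reservation scheme described above for both providers; the function names are illustrative only.

```python
import ipaddress

# Assumption: per subnet, the first four addresses (network, gateway/router,
# DNS, DNS or "future use") and the last (broadcast) address are reserved.
RESERVED_PER_SUBNET = 5

def reserved_addresses(cidr: str) -> list[str]:
    """Return the five addresses the provider holds back in the given subnet."""
    net = ipaddress.ip_network(cidr)
    first = net.network_address
    return [str(first), str(first + 1), str(first + 2), str(first + 3),
            str(net.broadcast_address)]

def usable_addresses(cidr: str) -> int:
    """Number of addresses left for VMs, NICs and other resources."""
    return ipaddress.ip_network(cidr).num_addresses - RESERVED_PER_SUBNET

print(reserved_addresses("10.0.1.0/24"))
print(usable_addresses("10.0.1.0/29"))  # 3
print(usable_addresses("10.0.0.0/8"))   # 16777211
```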

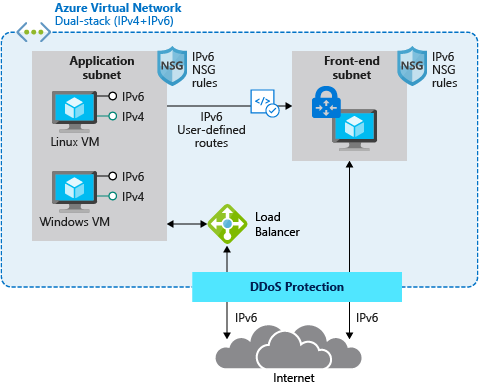

Both providers also support IPv6, including dual stack (IPv4/IPv6). Dual-stack virtual networks allow applications to connect over both IPv4 and IPv6 to resources within the network or to the Internet. For both providers, an IPv6 subnet must be exactly /64.

These are private address ranges, so we don't have to economize on them. Even so, it is still advisable to plan the overall architecture in advance, as part of preparing the so-called landing zone.

Although virtual networks are isolated, their address ranges should not overlap (this also applies to ranges used on-premise). This matters mainly for the future: the moment we interconnected overlapping ranges, erratic behavior would occur, up to a total outage.
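Overlaps are easy to catch before anything is deployed. The sketch below assumes a hypothetical address plan with made-up network names and checks every pair of ranges with `ipaddress.ip_network(...).overlaps()`.

```python
import ipaddress
from itertools import combinations

def find_overlaps(ranges: dict[str, str]) -> list[tuple[str, str]]:
    """Return pairs of network names whose address ranges overlap."""
    nets = {name: ipaddress.ip_network(cidr) for name, cidr in ranges.items()}
    return [(a, b) for a, b in combinations(nets, 2) if nets[a].overlaps(nets[b])]

# Hypothetical address plan: two VNets/VPCs and the on-premise range.
plan = {
    "vnet-shared-eastus2-001": "10.1.0.0/16",
    "vnet-app-eastus2-001": "10.2.0.0/16",
    "onprem-datacenter": "10.0.0.0/15",  # covers 10.0.0.0 - 10.1.255.255
}
print(find_overlaps(plan))  # [('vnet-shared-eastus2-001', 'onprem-datacenter')]
```

Running such a check whenever the plan changes is cheaper than re-addressing a network that is already peered or connected to on-premise.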

Naming conventions and network segmentation

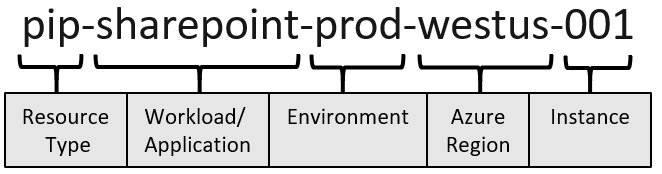

For virtual networks, it is always a good idea to define a naming convention and then stick to it. The general recommendation is to include the resource type, application name, environment, region and a sequence number. For example, a VNet in Azure might be named vnet-shared-eastus2-001.
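A convention is only useful if it is applied consistently, so it can help to generate and validate names in code. The helper below is hypothetical, not a provider API; it assumes the segment order type-workload-[environment]-region-instance, with the environment optional as in the vnet-shared-eastus2-001 example, and only dev/test/prod as environments.

```python
import re
from typing import Optional

# Assumed segment order: <type>-<workload>[-<env>]-<region>-<NNN>
NAME_PATTERN = re.compile(
    r"^[a-z]+-[a-z0-9]+(-(dev|test|prod))?-[a-z0-9]+-\d{3}$"
)

def build_name(resource_type: str, workload: str, region: str,
               instance: int, env: Optional[str] = None) -> str:
    """Assemble a lowercase name; the instance number is zero-padded to three digits."""
    parts = [resource_type, workload] + ([env] if env else []) + [region, f"{instance:03d}"]
    return "-".join(part.lower() for part in parts)

def is_valid_name(name: str) -> bool:
    """Check that a name follows the assumed convention."""
    return NAME_PATTERN.fullmatch(name) is not None

print(build_name("vnet", "shared", "eastus2", 1))           # vnet-shared-eastus2-001
print(build_name("vnet", "app", "eastus2", 2, env="prod"))  # vnet-app-prod-eastus2-002
```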

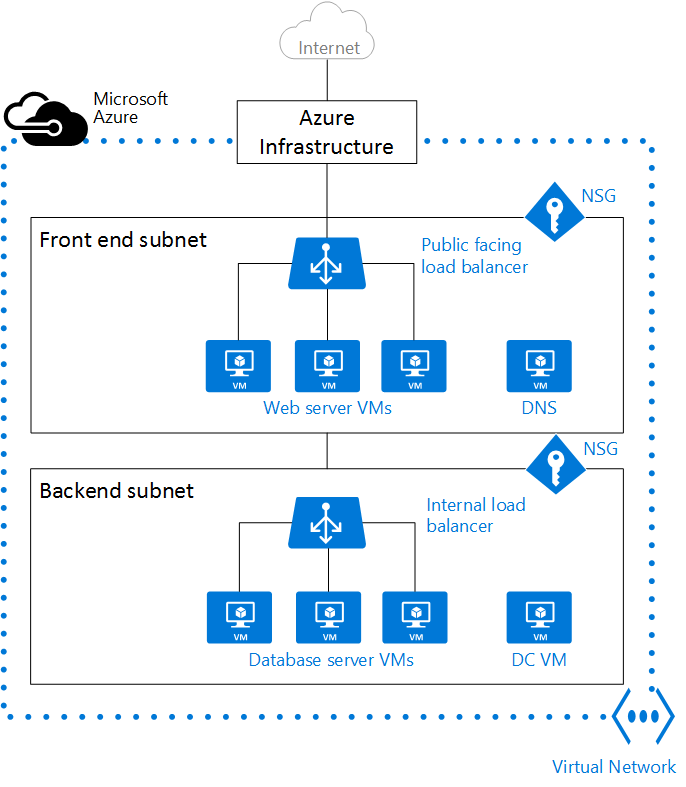

The address range must always contain at least one subnet, although a single subnet is rarely the optimal solution. We apply similar segmentation logic as we know from on-premise, usually defined by the network team together with the security team.

We just have to remember that we don't use VLANs. Typically, an application has at least a frontend and a backend subnet, and there can also be dedicated subnets for storage, AKS and more.

The golden rule is to leave a sufficient margin when addressing, as re-addressing can be problematic. It is better to assign more addresses and leave them unused than to assign too few and cause problems in the future. If you're not sure, you usually won't go wrong with a /16 address range and /24 subnets.
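As a rough illustration of the /16 plus /24 rule of thumb, the following sketch carves a VNet range into consecutive /24 subnets for hypothetical frontend, backend, storage and AKS subnets. It only does the address arithmetic; creating the subnets would still happen in the portal, the CLI or infrastructure-as-code tooling.

```python
import ipaddress

def plan_subnets(vnet_cidr: str, names: list[str], new_prefix: int = 24) -> dict[str, str]:
    """Assign consecutive, equally sized subnets (/new_prefix) to the given names."""
    vnet = ipaddress.ip_network(vnet_cidr)
    blocks = vnet.subnets(new_prefix=new_prefix)  # generator of equal-sized subnets
    plan = {}
    for name in names:
        try:
            plan[name] = str(next(blocks))
        except StopIteration:
            raise ValueError(f"{vnet_cidr} has no room for subnet '{name}'") from None
    return plan

# Hypothetical subnet names for one application VNet.
subnets = plan_subnets("10.1.0.0/16", ["snet-frontend", "snet-backend", "snet-storage", "snet-aks"])
print(subnets["snet-frontend"])  # 10.1.0.0/24
print(subnets["snet-aks"])       # 10.1.3.0/24
```

A /16 holds 256 such /24 subnets, which is exactly the kind of headroom the rule of thumb is meant to leave.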

Less is sometimes more

Virtual networks are free, regardless of how many there are, their address ranges or the number of subnets. However, it is recommended not to overdo it, because more networks are harder to manage and make the overall environment more cluttered and complicated.

In general, VNets/VPCs can be divided, for example, by region, environment, department or application. If resources need to communicate with each other, they can share the same network and avoid unnecessary peering.

Isolation and security can be controlled to some extent by using security groups, which I describe in more detail later in this article. If, on the other hand, different teams manage the applications and need to configure the network themselves, it may be better to split them up.

Limits on the number of virtual networks vary considerably between providers. Azure allows 1,000 virtual networks per subscription and region and 3,000 subnets per VNet. AWS has a default limit of 5 VPCs per region (which can be increased to 100) and 200 subnets per VPC.

Network design

For both providers, virtual networks are regional. A single network cannot be stretched across multiple regions, and the resources connected to a VNet/VPC must always be in the same region. Exceptions are some global services, such as CDN, that have no defined region or use one only for metadata.

If we want to communicate with resources outside the VNet/VPC or region, we can set up peering. Peering itself is free, but you pay for the data that flows through it.

Network and PaaS integration

With a few exceptions, most services need a virtual network to connect to in order to operate. The overwhelming majority of PaaS services also support VNet/VPC integration, but some can work without a VNet/VPC connection.

In that case, they connect to an internal virtual network that is not visible to the user in the portal and cannot be managed in any way. Depending on the specific PaaS service, additional functionality such as a firewall or DNS may be available. Communication then takes place over the cloud provider's backbone network or over the Internet, which allows the service, for example, to access the Internet, use a public IP address and be protected against DDoS. An example of such a service is App Service (Azure) or Elastic Beanstalk (AWS), where users connect only from the Internet.

However, services not connected to the customer network are rather rare, and for many of them I would still consider keeping communication within the VNet for security reasons. This can be achieved with service and private endpoints, which I describe in more detail below.

Integration of cloud networks and on-premise environments

To connect to on-premise, we consider either a site-to-site (S2S) VPN or, ideally, ExpressRoute/Direct Connect (or a combination of both).

An S2S VPN, an encrypted tunnel over the Internet, needs no introduction. ExpressRoute (Azure) and Direct Connect (AWS) are services for creating private dedicated lines between the on-premise datacenter and the provider's datacenter. These links are more reliable and faster, and they provide lower latency than communication over the Internet.

If traffic goes via ExpressRoute/Direct Connect, that service's price list applies. Otherwise, you pay as usual for data leaving the cloud, called egress. There is no charge for ingress (uploading data to Azure), as I mentioned in my previous article.

What about network and subnet isolation?

Because of their isolation, VNets/VPCs cannot see each other by default: resources in different networks either do not communicate at all, or communicate less securely outside the VNet (over the Internet or the provider's internal network).

This can be "bypassed" if necessary by setting up the aforementioned peering between VNets. Peering traffic is not free: both egress from the source VNet and ingress into the destination VNet are charged. Prices also vary depending on whether the peering is within a region or between regions. Intra-regional peering is cheaper, and even global peering does not cost a staggering amount of money (though it is good to keep it in mind).

Subnets are used to divide the address range into multiple blocks for better visibility, segmentation and security. Unlike VNets/VPCs, communication between subnets within a single virtual network is not restricted by default. Resources are not isolated by subnets and can communicate with each other. This communication can be limited using security groups.

Basic network firewalling

When it comes to the first level of filtering and restricting traffic, we start talking about security groups. In AWS they are called security groups, in Azure network security groups (NSGs). These are basic firewalls that can be attached to an entire subnet or to a specific VM/EC2 instance or its network interface card (NIC). In simple terms, they are lists of rules for incoming and outgoing traffic. In Azure, each rule has a priority that determines the order in which the rules are evaluated (a principle similar to iptables). In AWS, security groups are attached to network interfaces and contain only allow rules; subnet-level filtering with numbered rules is handled by network ACLs.

To give you an idea: in Azure, a security rule must always contain a source, port range, destination, protocol (Any/TCP/UDP/ICMP), action (Allow/Deny) and priority. The source can be Any, an IP address, a service tag or an application security group. A service tag is a label for a predefined group of addresses, such as Internet or VirtualNetwork. The destination can be Any, an IP address, a service tag (such as VirtualNetwork) or an application security group.

Example: we deny all (Any) inbound traffic with priority 1000 and allow port 80 from the Internet with priority 500. In this case, a packet from the Internet arriving at port 80 of the destination IP is allowed by the NSG, and anything else is dropped. If the deny rule had the higher priority (a lower number), traffic to port 80 would also be dropped.
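The priority logic from the example can be modelled in a few lines. This is a deliberately simplified sketch of Azure NSG-style evaluation (rules sorted by priority, first match wins); it ignores destinations, protocols and the default rules Azure adds to every NSG, and the rule names are made up.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Rule:
    name: str
    priority: int          # lower number = higher priority, evaluated first
    source: str            # "Any" or a service tag such as "Internet"
    port: Optional[int]    # destination port; None means any port
    action: str            # "Allow" or "Deny"

def evaluate(rules: list[Rule], source: str, port: int) -> str:
    """Return the action of the first rule that matches, in priority order."""
    for rule in sorted(rules, key=lambda r: r.priority):
        if rule.source in ("Any", source) and rule.port in (None, port):
            return rule.action  # first match wins; remaining rules are skipped
    return "Deny"  # simplification: treat "no match" as deny

rules = [
    Rule("deny-all-inbound", 1000, "Any", None, "Deny"),
    Rule("allow-http-internet", 500, "Internet", 80, "Allow"),
]
print(evaluate(rules, "Internet", 80))  # Allow
print(evaluate(rules, "Internet", 22))  # Deny
```

Changing the deny rule's priority to, say, 100 makes it match first, so port 80 is dropped as well, exactly as described above.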

Network topologies

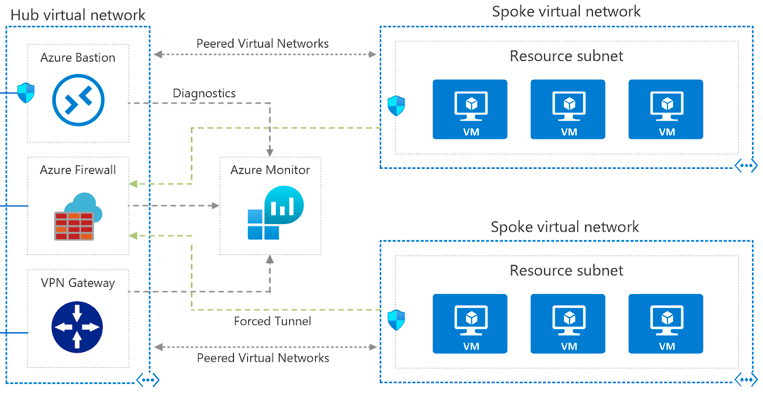

Probably the most common topology we encounter in the cloud at ORBIT is hub and spoke. In this topology, there is one central VNet/VPC that terminates VPN connections (either S2S or P2S) and hosts services shared by the other networks.

The hub network is then peered with each of the other (spoke) networks. Because there is no peering directly between the spokes (only between the hub and each spoke) and forwarding is not enabled on the hub peerings, the spokes remain isolated from each other. A minor disadvantage is that every newly added network must also be peered with the hub.
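Why the spokes stay isolated can be shown with a tiny model in which a peering is an undirected link and, without forwarding through the hub, traffic only flows over a direct peering (peering is not transitive). The network names are hypothetical, and gateway transit or routing through an NVA is deliberately left out.

```python
# Each peering is an undirected link between two networks (hypothetical names).
peerings: set[frozenset[str]] = {
    frozenset({"vnet-hub", "vnet-spoke1"}),
    frozenset({"vnet-hub", "vnet-spoke2"}),
}

def can_communicate(a: str, b: str, links: set[frozenset[str]]) -> bool:
    """Without forwarding in the hub, only directly peered networks can talk."""
    return a == b or frozenset({a, b}) in links

def add_spoke(spoke: str, hub: str, links: set[frozenset[str]]) -> None:
    """Every new spoke needs its own peering with the hub."""
    links.add(frozenset({hub, spoke}))

print(can_communicate("vnet-spoke1", "vnet-hub", peerings))     # True
print(can_communicate("vnet-spoke1", "vnet-spoke2", peerings))  # False
```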

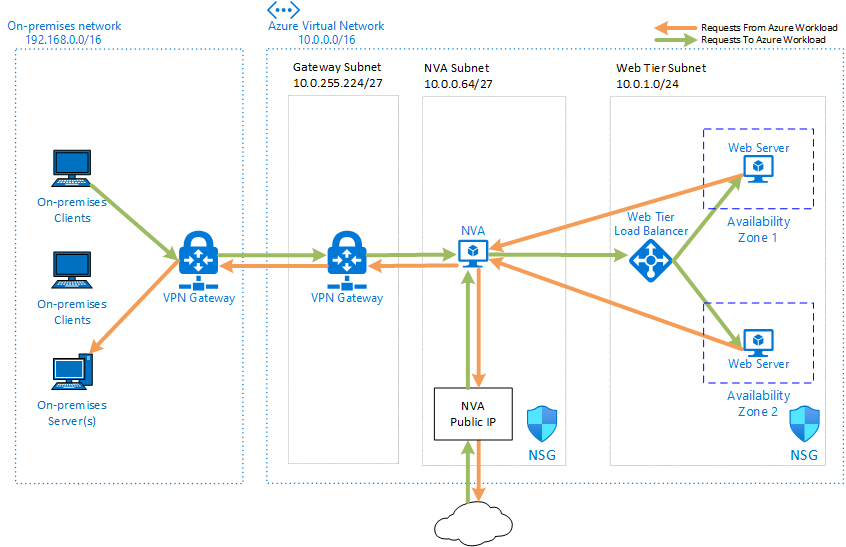

In addition to hub-and-spoke topologies, we also often encounter a design with a single NVA (network virtual appliance), where traffic is routed through a security appliance that controls both outgoing and incoming traffic. In this design the NVA is a single point of failure, so it must be deployed in HA mode. The NVA ensures that only traffic meeting clearly defined criteria passes through.

Service and private endpoints

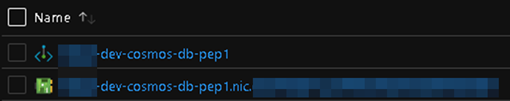

In the previous paragraphs I mentioned integrating PaaS services with client networks. This can be done in two ways, which differ in terms of security. The first is a service endpoint, which allows a subnet to connect to a particular group of services. The second is a private endpoint, which maps a specific resource directly into the VNet: a new NIC is created for it (similar to a VM).

While the service endpoint is set on the subnet, the private endpoint is set directly on the PaaS service.

Both options reduce the risk of exposing resources directly to the Internet and allow traffic to be controlled by security groups (for a private endpoint, even at the NIC level). In neither case does the communication leave the provider's network.

There are, however, two major differences. The first is scope: a private endpoint connects a specific resource and creates a NIC for it, while a service endpoint enables connections to all resources of the same type (e.g. all storage accounts).

The second is the connection method: the service endpoint essentially "extends" the network's address space (and identity) to the service, with communication staying on the provider's backbone, while the private endpoint brings the specific service instance into the VNet.

Protection against DDoS attacks

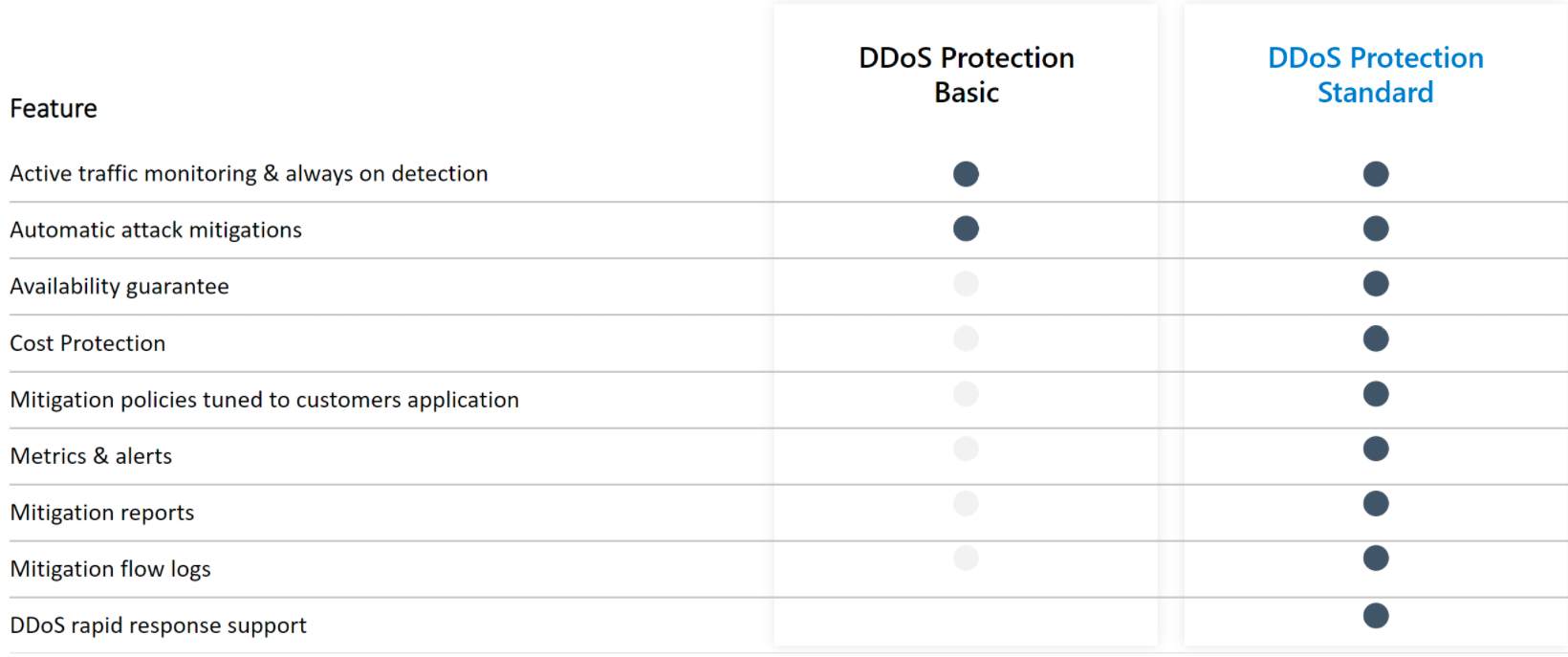

All Azure and AWS resources are protected by basic DDoS protection, which is free and requires no configuration. In Azure it is DDoS Basic, in AWS Shield Standard. There are also paid options: DDoS Standard in Azure and Shield Advanced in AWS. The paid options are not among the cheapest services, but they bring additional functionality over the basic plan (see the image).

The paid DDoS protection, which covers up to 100 public IP addresses, costs approximately 3,000 USD per month with both providers. Each additional protected resource or public IP address above the limit costs 30 USD per month. The good thing is that a single Standard plan can cover the whole tenant across subscriptions.
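Using the approximate prices quoted above (check the current price lists, as they change), the monthly cost of a paid plan can be estimated as follows. The sketch also shows why a single tenant-wide plan matters: the protected IPs of all subscriptions count against one plan. The subscription sizes are hypothetical.

```python
# Approximate figures quoted above; real prices differ by provider and change over time.
BASE_MONTHLY_USD = 3000      # paid plan, includes up to 100 public IPs
INCLUDED_IPS = 100
EXTRA_IP_MONTHLY_USD = 30    # each protected public IP above the limit

def ddos_monthly_cost(protected_ips: int) -> int:
    """Estimated monthly price of one paid DDoS plan."""
    extra_ips = max(0, protected_ips - INCLUDED_IPS)
    return BASE_MONTHLY_USD + extra_ips * EXTRA_IP_MONTHLY_USD

# Hypothetical tenant: three subscriptions with 50, 40 and 30 public IPs.
per_subscription = [50, 40, 30]
print(ddos_monthly_cost(sum(per_subscription)))             # 3600 with one shared plan
print(sum(ddos_monthly_cost(n) for n in per_subscription))  # 9000 with a plan per subscription
```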

Application load balancing

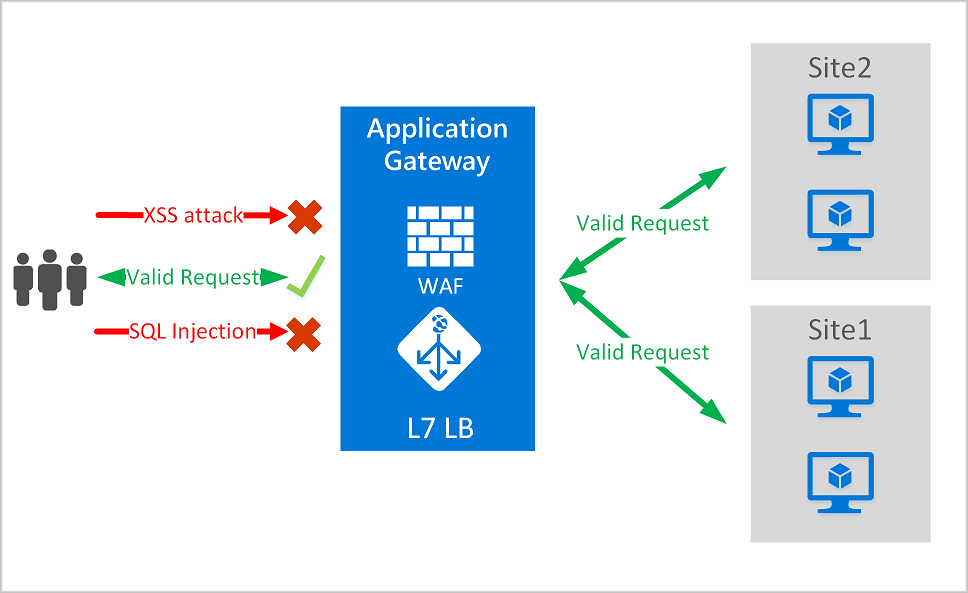

Load balancers are also an important part of networks. In addition to classic load balancers working at layer 4, cloud providers also offer more advanced application load balancers (Application Gateway in Azure, Application Load Balancer in AWS), which operate at layer 7 (L7) of the OSI model and therefore support additional features such as cookie-based session affinity.

Traditional load balancers at the fourth (transport) layer operate at the TCP/UDP level and route traffic based on the source IP address and port and the destination IP address and port. Application load balancers, in contrast, can make decisions based on the content of a specific HTTP request, for example routing by the requested URL.
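The difference between the two kinds of decisions can be sketched as follows. The L4 function picks a backend from a hash of the connection's 5-tuple (Azure Load Balancer, for instance, distributes by a 5-tuple hash by default, but the SHA-256 hash used here is an arbitrary choice, not the provider's algorithm), while the L7 function looks at the URL path. The pool names, backend addresses and path rules are hypothetical.

```python
import hashlib

# Hypothetical L7 rules: URL path prefix -> backend pool.
PATH_RULES = {"/api/": "pool-api", "/images/": "pool-static"}
DEFAULT_POOL = "pool-web"

def route_l4(src_ip: str, src_port: int, dst_ip: str, dst_port: int,
             protocol: str, backends: list[str]) -> str:
    """L4: only addresses, ports and protocol are visible, so hash the 5-tuple."""
    key = f"{src_ip}:{src_port}>{dst_ip}:{dst_port}/{protocol}".encode()
    index = int(hashlib.sha256(key).hexdigest(), 16) % len(backends)
    return backends[index]  # the same connection always lands on the same backend

def route_l7(path: str) -> str:
    """L7: the HTTP request is visible, so route by the longest matching path prefix."""
    matches = [prefix for prefix in PATH_RULES if path.startswith(prefix)]
    return PATH_RULES[max(matches, key=len)] if matches else DEFAULT_POOL

print(route_l7("/api/orders/42"))  # pool-api
print(route_l7("/index.html"))     # pool-web
```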

Application load balancers can also act as a web application firewall. Because they operate at the application layer, they provide significantly more advanced protection than, for example, the security groups mentioned earlier. A web application firewall (WAF) provides centralized protection against various exploits and vulnerabilities (for example SQL injection or cross-site scripting).

Accelerate and smooth web application performance

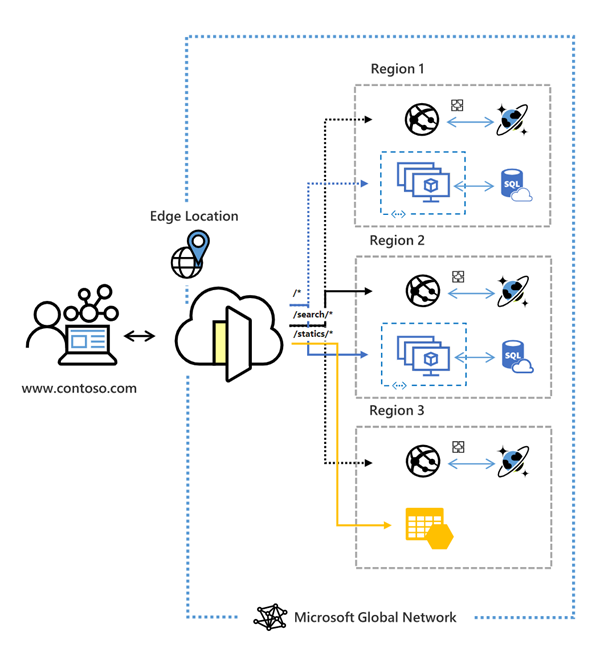

Azure Front Door and AWS Global Accelerator are global services that improve performance for users around the world. Front Door operates at layer 7 (L7), while Global Accelerator works at the TCP/UDP level (L4). It is claimed that these services can improve performance by up to 60%.

Some of the benefits of Azure Front Door:

- application performance acceleration using split TCP-based anycast protocol

- intelligent monitoring of backend resource health

- URL-path based routing

- hosting multiple websites for an efficient application infrastructure

- cookie-based session affinity

- SSL offloading and certificate management

- defining your own domain

- application security with integrated Web Application Firewall (WAF)

- redirecting HTTP traffic to HTTPS with URL redirect

- URL rewrite

- native support for end-to-end IPv6 connections and HTTP/2 protocol

Traffic Manager & Route 53

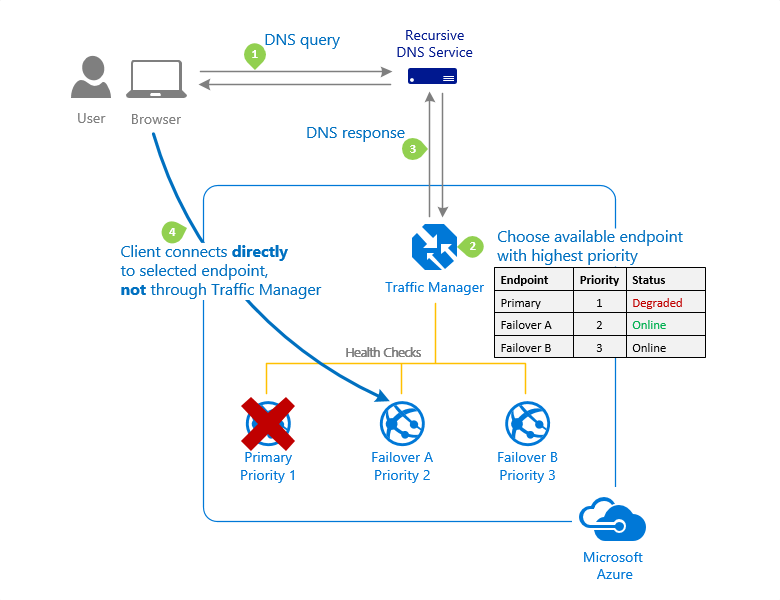

Now we are stepping outside the OSI model. Traffic Manager (Azure) and Route 53 (AWS) do not operate on any of its layers; they are DNS-based.

Traffic Manager is a DNS-based load balancer that distributes traffic for Internet-facing applications across regions. It also provides high availability and fast responses for these public endpoints.

What does it all mean? Simply put, it's a way to direct clients to the appropriate endpoints. Traffic Manager offers several routing methods for this:

- Priority routing

- Weighted routing

- Performance routing

- Geographical routing

- Multivalue routing

- Subnet routing

These methods can be combined (Traffic Manager supports nested profiles for this) to make the resulting scenario as flexible and sophisticated as needed.

At the same time, the health of each region is automatically monitored and traffic is redirected if an outage occurs.
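Two of these methods, priority and weighted routing, combined with health monitoring, can be sketched like this. It is a simplified model of the idea, not the Traffic Manager implementation (which answers DNS queries rather than picking endpoints in application code); the endpoint names are hypothetical.

```python
import random
from dataclasses import dataclass
from typing import Optional

@dataclass
class Endpoint:
    name: str
    priority: int         # priority routing: 1 is tried first
    weight: int           # weighted routing: relative share of traffic (must be > 0)
    healthy: bool = True  # result of health monitoring

def priority_routing(endpoints: list[Endpoint]) -> Optional[str]:
    """Return the healthy endpoint with the best (lowest) priority value."""
    healthy = [e for e in endpoints if e.healthy]
    return min(healthy, key=lambda e: e.priority).name if healthy else None

def weighted_routing(endpoints: list[Endpoint], rng: random.Random) -> Optional[str]:
    """Pick a healthy endpoint at random, proportionally to its weight."""
    healthy = [e for e in endpoints if e.healthy]
    if not healthy:
        return None
    return rng.choices(healthy, weights=[e.weight for e in healthy], k=1)[0].name

endpoints = [
    Endpoint("app-westeurope", priority=1, weight=3),
    Endpoint("app-northeurope", priority=2, weight=1),
]
print(priority_routing(endpoints))  # app-westeurope
endpoints[0].healthy = False        # simulate a regional outage
print(priority_routing(endpoints))  # app-northeurope
```

With weights 3 and 1, roughly three quarters of the clients would be sent to the first region while both are healthy; once one fails, all traffic goes to the other.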

Conclusion

Today's article was a bit more technical and extensive than the previous one. However, we went over all the key components of cloud networks and corresponding services that can operate at different layers of the OSI model (specifically L3-L7).

The target concept of cloud networking can vary greatly depending on the application, corporate and security policies, and also on the budget, which is always limited. For networks, too, the rule is to use only what makes sense and brings sufficient benefit for its price. We may like a paid DDoS plan, but as a startup we will probably do without it and prefer to save 3,000 USD (about 65k CZK) per month.

Whether you are more or less involved in networking and the cloud, I believe today's article has shown the benefits you can achieve with the right network architecture.

If you're interested in other topics related to the cloud, check out our series Cloud Encyclopedia - A quick guide to the cloud.