Storing data in the cloud: make sure you know what, why, where and how

Cloud services provide businesses with a number of benefits, including increased flexibility, scalability and easy access to data anywhere, anytime. In this article, we'll look at the importance of storing data in the cloud, how to do it right, and why businesses should consider Azure and AWS tools.

Filip Kamenar

In today's digital era, storing data in the cloud is an increasingly popular choice for businesses and individuals. The days of storing personal data on various flash drives (which could be lost at the first opportunity) are long gone.

We all know and overwhelmingly use personal cloud storage like Apple's iCloud, Microsoft's OneDrive or Google Drive. It's easy, secure, and we get used to the availability of our data on all devices very quickly.

And the same is true for corporate solutions.

What does cloud storage mean?

Putting data in the cloud means that the company moves its data and information from physical storage, such as hard drives, server farms or data centres, to the cloud provider's virtual space.

This involves transferring data to, and storing it on, remote servers that the company connects to securely via the internet, a VPN or a private data line. As a result, the company does not have to make expensive investments in its own hardware and infrastructure, which brings several advantages.

Cloud service providers' servers are located in data centres around the world, ensuring redundancy and good data availability. The data centre network is very dense, so a company can choose the geographically closest data centre to achieve low latency.

Data is accessed via APIs, web browser applications or dedicated applications.

Benefits of storing data in the cloud

One of the main advantages of storing corporate data in the cloud is scalability and flexibility. Cloud providers like AWS and Azure offer different service levels so you can adjust the size and performance of your data storage according to current needs. You can react quickly to changes and maintain the right storage capacity without unnecessary costs.

Data in the cloud is also protected by encryption and other security measures that minimise the risk of loss or leakage. Cloud providers offer ready-made solutions for regular data backups that protect data even in the event of an accident or equipment failure (see the article Cloud backup: why, when, what and, most importantly, how?).

For much of cloud storage, it is true that you pay only for the data actually stored, not for the storage capacity provided (there are exceptions to this, which we will explain later). This eliminates the need to make complex calculations in advance about how much data will need to be stored and to specify the storage parameters accordingly.
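To make the "pay for what you store" point concrete, here is a small cost comparison. It is a sketch with hypothetical unit prices (not real AWS or Azure pricing): on-premise you pay for the whole provisioned capacity every month, while in the cloud you pay only for the terabytes actually stored in each month.

```python
from __future__ import annotations

# Hypothetical unit prices for illustration only; real prices depend on provider, region and tier.
ONPREM_PER_TB_MONTH = 20.0  # amortised cost of each provisioned on-premise TB
CLOUD_PER_TB_MONTH = 30.0   # pay-as-you-go price of each TB actually stored


def total_costs(provisioned_tb: float, stored_tb_per_month: list[float]) -> tuple[float, float]:
    """Return (on-premise cost, cloud cost) over the months listed.

    On-premise, the whole provisioned capacity is paid for every month;
    in the cloud, only the data stored in each month is paid for.
    """
    months = len(stored_tb_per_month)
    onprem = provisioned_tb * ONPREM_PER_TB_MONTH * months
    cloud = sum(tb * CLOUD_PER_TB_MONTH for tb in stored_tb_per_month)
    return onprem, cloud


# 100 TB provisioned up front, while actual usage grows from 20 to 40 TB.
onprem, cloud = total_costs(100, [20, 30, 40])
print(f"on-premise: {onprem:.0f}, cloud: {cloud:.0f}")  # on-premise: 6000, cloud: 2700
```

Even with a higher per-TB price in the cloud, low utilisation of provisioned capacity dominates the result. This model does not cover the exceptions mentioned above, such as virtual server disks, which are billed by provisioned size.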

Let's take a look at four scenarios our customers often encounter and how they can be addressed in on-premise and cloud environments.

1. Oversized storage capacity

Has on-premise storage turned out to be oversized? Then you're paying unnecessarily for capacity you don't need and could have spent the money in other ways.

- Solution in the cloud: In the cloud, this situation doesn't arise because you don't pay for unused capacity. You only pay for the data you actually store.

2. Insufficient storage capacity

Have hundreds of megabytes become gigabytes? Has your on-premise storage capacity turned out to be insufficient, so you need to expand it? This can complicate the budget, as hardware investments are often planned well in advance. There may also be technical obstacles that make expansion impossible.

- Solution in the cloud: The cloud handles this situation for you, as you do not pre-order capacity. From the customer's perspective, cloud storage capacity is virtually "unlimited", and it's up to you how much data you store.

3. Unused storage

The implementation of the application for which you built the datastore has been cancelled, and the hardware resources for the datastore are unused? You may be able to use the capacity for another application, but often the storage is left empty and the financial investment goes to waste.

- Solution in the cloud: If the same situation occurs in the cloud, just delete the unnecessary data and you stop paying for the storage it occupied. If you need to keep the data in case the project is revived, you can move it to a data service designed for archiving and significantly reduce costs.

4. Problem with data backup and recovery

Enterprise customers in on-premise environments often struggle with data backup and recovery. Traditional backup methods can be complex and unreliable, which increases the risk of data loss. In addition, as data volumes grow, it can be difficult to keep up with backup needs.

- Solution in the cloud: Cloud providers offer automatic, regular data backups, which reduce the risk of data loss and make data recovery easier. For corporate customers, this means higher reliability and a lower administrative burden, because the provider operates the backup infrastructure and the customer only defines the backup policies.

Where to store data in the cloud: choosing the right service

We've explained what cloud storage is, but where do we go from here? Which services should we choose? To make that decision, we first need to be clear about which data we want to store, and then select the most appropriate service for that use case.

First, let's look at a few usage scenarios and then choose the right service from AWS or Azure. If we choose the wrong one, the whole data storage solution might be unnecessarily expensive and might not meet the speed or functionality requirements.

Signpost for choosing the right data storage service

- Need to replace a shared network drive that the whole office uses -> Amazon FSx or Azure Files

- Need to back up to data tapes regularly -> AWS Storage Gateway (Tape Gateway) and Amazon S3

- Need fast block storage to attach to a virtual server -> Amazon EBS or Azure Managed Disks

- Need storage that multiple virtual servers can access at the same time -> Amazon EFS or Azure Files

- Want to store static data from your web application -> Amazon S3 or Azure Blob storage

- Need to archive data for years -> Amazon S3 Glacier or Azure Archive Blob storage

- Need object storage available both from the cloud and on-premise -> Amazon S3 with Storage Gateway, or Azure StorSimple

- Want to replace a locally hosted Windows Server file share -> Amazon FSx for Windows File Server or Azure Files

- Need shared storage with sub-millisecond response time -> Amazon FSx for Lustre or Azure NetApp Files
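The signpost above is essentially a lookup table. One way to keep it close to provisioning scripts is to encode it as data. The use-case keys below are hypothetical labels, and the service names come from the list. Unknown cases fail loudly rather than falling back to a default service.

```python
from __future__ import annotations

# Use-case keys are hypothetical labels; values are (AWS option, Azure option or None).
SIGNPOST: dict[str, tuple[str, str | None]] = {
    "office_shared_drive": ("Amazon FSx", "Azure Files"),
    "tape_backup": ("AWS Storage Gateway (Tape Gateway) + Amazon S3", None),
    "vm_block_storage": ("Amazon EBS", "Azure Managed Disks"),
    "shared_by_many_vms": ("Amazon EFS", "Azure Files"),
    "static_web_content": ("Amazon S3", "Azure Blob storage"),
    "long_term_archive": ("Amazon S3 Glacier", "Azure Archive Blob storage"),
    "hybrid_object_storage": ("Amazon S3 + Storage Gateway", "Azure StorSimple"),
    "windows_file_share": ("Amazon FSx for Windows File Server", "Azure Files"),
    "sub_ms_shared_storage": ("Amazon FSx for Lustre", "Azure NetApp Files"),
}


def recommend(use_case: str) -> tuple[str, str | None]:
    """Return the (AWS, Azure) services suggested for a use case."""
    try:
        return SIGNPOST[use_case]
    except KeyError:
        raise ValueError(
            f"unknown use case {use_case!r}; choose from {sorted(SIGNPOST)}"
        ) from None


print(recommend("vm_block_storage"))  # ('Amazon EBS', 'Azure Managed Disks')
```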

How do I store data in the cloud? A sample of typical cases

1. Migrating an on-premises fileshare to the cloud

Your company has a traditional fileshare where office workers store, share and manage their documents. However, with the increase in mobility and remote working, accessing these files is becoming restrictive and impractical. So you'd like to bring this infrastructure to the cloud, with the promise of easy and secure access to files from anywhere.

- Solution in the cloud: For this scenario, a cloud service such as Azure Files or Amazon FSx is ideal. Both offer managed file storage as a service, which means you don't have to manage the infrastructure yourself and can rely on the cloud platform of your choice.

- Ideally, connect the corporate network to the cloud provider. End users will not be able to tell whether the corporate fileshare is in the cloud or on-premise, because folders are mapped in the same way. Users can be authenticated with a federated corporate identity such as Active Directory.
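Mapping a drive to Azure Files works like mapping any SMB share. The share is addressed by a UNC path of the form `\\<storage-account>.file.core.windows.net\<share>`. The minimal sketch below builds only that path and the matching Windows `net use` command. The account and share names are hypothetical, the helper names are illustrative, and the code does not contact Azure.

```python
import re

# Azure storage account names are 3-24 characters, lowercase letters and digits only.
_ACCOUNT_NAME = re.compile(r"[a-z0-9]{3,24}")


def azure_files_unc(storage_account: str, share: str) -> str:
    """Build the SMB UNC path of an Azure file share."""
    if not _ACCOUNT_NAME.fullmatch(storage_account):
        raise ValueError(f"invalid storage account name: {storage_account!r}")
    return f"\\\\{storage_account}.file.core.windows.net\\{share}"


def net_use_command(drive_letter: str, storage_account: str, share: str) -> str:
    """Build the Windows command that maps the share to a drive letter."""
    return f"net use {drive_letter}: {azure_files_unc(storage_account, share)}"


print(net_use_command("Z", "contosofiles", "documents"))
# net use Z: \\contosofiles.file.core.windows.net\documents
```

With identity-based (Active Directory) authentication, users map the share with their usual corporate credentials. Note that SMB traffic uses TCP port 445, which must be allowed between the corporate network and Azure.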

2. Migrating an application with data stored on a local disk array

Your application, hosted in an on-premise environment, uses traditional storage based on local hard disks. The existing solution is nearing the end of its life, which means increased maintenance and hardware refresh costs. Replacing hardware, especially disks, is costly and requires additional staff.

The application is expected to expand over the next few years, so the amount of stored data will grow as well. However, it is currently impossible to say how much storage capacity will be required.

- Solution in the cloud: You can use Amazon EBS or Azure Managed Disks for application storage. Their advantage is that you can adjust the capacity of individual volumes over time, so you assign the application only as much capacity as it will actually use.

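As the application grows, you can increase storage capacity as needed. Note, however, that neither EBS volumes nor Azure managed disks can be shrunk in place. If the amount of stored data decreases, you need to move the data to a new, smaller volume to reduce capacity.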
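Growing an EBS volume is a single API call. A minimal sketch, assuming boto3's `EC2.Client.modify_volume`, which takes `VolumeId` and a new `Size` in GiB. Because EBS cannot shrink a volume in place, the helper rejects a smaller target. The volume ID and helper names are hypothetical.

```python
from __future__ import annotations


def build_resize_request(volume_id: str, current_gib: int, target_gib: int) -> dict:
    """Build kwargs for EC2 ModifyVolume; EBS volumes can be grown but not shrunk in place."""
    if target_gib <= current_gib:
        raise ValueError(
            f"target size {target_gib} GiB must be larger than current {current_gib} GiB; "
            "to shrink, copy the data to a new, smaller volume"
        )
    return {"VolumeId": volume_id, "Size": target_gib}


def apply_resize(request: dict) -> dict:
    """Send the request to AWS (needs boto3 and credentials; not called in this sketch)."""
    import boto3  # imported lazily so the helper above works without boto3 installed

    return boto3.client("ec2").modify_volume(**request)


request = build_resize_request("vol-0123456789abcdef0", current_gib=100, target_gib=200)
print(request)  # {'VolumeId': 'vol-0123456789abcdef0', 'Size': 200}
```

After the volume has grown, the partition and file system inside the operating system still have to be extended before the application can use the extra space.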

3. Data backup and disaster recovery

Your company has critical data stored on local servers. Unfortunately, in the past you have suffered a disaster and lost some of your data. You would like to ensure regular and reliable data backup and quick recovery in case of a crisis situation.

- Solution in the cloud: Cloud platforms like Azure and AWS provide a wide range of services for data backup and recovery. Typical examples are Azure Backup and AWS Backup, which allow you to define different backup policies.

If a disaster strikes, you can recover your data from the cloud and minimise disruption to your business. These backups can be stored in object storage such as Amazon S3 or Azure Blob storage, which offer different storage classes, from short-term storage to long-term archiving.
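A backup policy of this kind can be expressed as data. The sketch below assumes the request shape of boto3's AWS Backup `create_backup_plan(BackupPlan=...)`, with the fields `BackupPlanName`, `Rules`, `ScheduleExpression` and `Lifecycle`. The plan name, schedule and retention values are hypothetical. It encodes one documented constraint: recovery points moved to cold storage must stay there for at least 90 days.

```python
from __future__ import annotations

COLD_STORAGE_MINIMUM_DAYS = 90  # AWS Backup keeps cold-storage recovery points at least 90 days


def build_backup_plan(
    name: str,
    vault: str,
    schedule: str,
    delete_after_days: int,
    cold_after_days: int | None = None,
) -> dict:
    """Build a BackupPlan dict with a single scheduled rule."""
    lifecycle: dict[str, int] = {"DeleteAfterDays": delete_after_days}
    if cold_after_days is not None:
        if delete_after_days < cold_after_days + COLD_STORAGE_MINIMUM_DAYS:
            raise ValueError("retention must exceed the cold-storage transition by at least 90 days")
        lifecycle["MoveToColdStorageAfterDays"] = cold_after_days
    rule = {
        "RuleName": f"{name}-rule",
        "TargetBackupVaultName": vault,
        "ScheduleExpression": schedule,  # AWS cron syntax, e.g. "cron(0 5 ? * * *)"
        "Lifecycle": lifecycle,
    }
    return {"BackupPlanName": name, "Rules": [rule]}


# Hypothetical plan: daily backup at 05:00 UTC, cold storage after 30 days, deleted after a year.
plan = build_backup_plan("daily-critical", "Default", "cron(0 5 ? * * *)", 365, cold_after_days=30)
# To apply (requires boto3 and AWS credentials):
#   boto3.client("backup").create_backup_plan(BackupPlan=plan)
```

Only some resource types support the transition to cold storage, so check this before relying on `MoveToColdStorageAfterDays`.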

4. On-premise storage expansion combined with hybrid cloud storage

Your on-premise data storage is running out of capacity and needs to be expanded. However, this was not foreseen in the IT budget, there are not enough funds for the expansion, and it is not possible to wait until the next investment period.

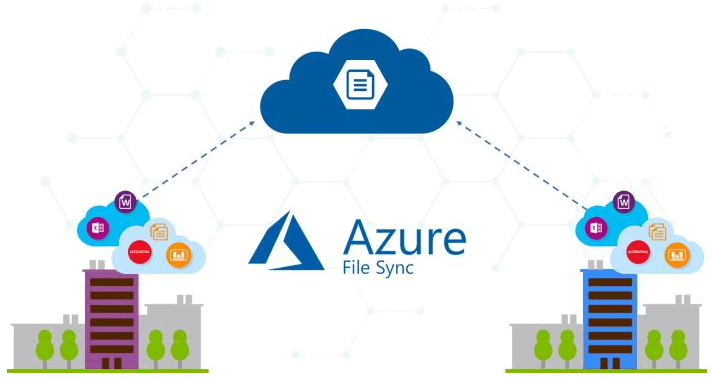

- Solution in the cloud: You can keep your existing on-premise storage and expand its capacity with hybrid storage: on AWS, using the Storage Gateway service; on Azure, using Azure File Sync.

In the on-premise environment, you deploy a virtual gateway (with Azure File Sync, an agent on a Windows Server) that gives the local infrastructure access to cloud storage. This lets you expand your enterprise storage capacity without unnecessary infrastructure costs, and, as usual, you only pay for the data you store in the cloud.

Selected AWS and Azure cloud storage services and their purpose

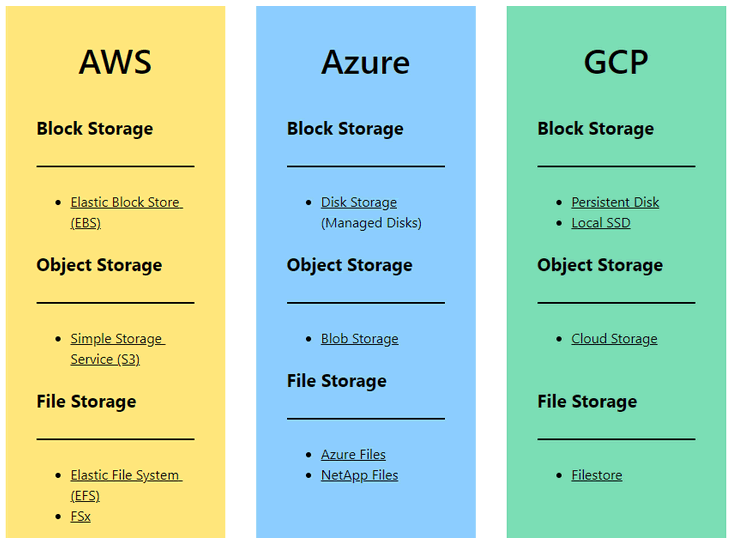

Object storage

In a cloud environment, object storage means storage in which data is kept as objects. Each object contains the data itself together with metadata and a unique identifier. This type of storage is a cost-effective way to store unstructured data and is used for data backup, web hosting, analysis of large datasets, content distribution, application data storage and easy file sharing.
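The object model can be illustrated with a toy in-memory store. This is a conceptual sketch, not an S3 or Blob storage client. Each object carries its data, its metadata and a unique key. Objects are written whole rather than edited in place, and "folders" are just key prefixes in a flat namespace.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass(frozen=True)
class StoredObject:
    key: str                      # unique identifier within the store (bucket/container)
    data: bytes                   # the object's content, treated as an opaque blob
    metadata: dict[str, str] = field(default_factory=dict)


class ToyObjectStore:
    """In-memory illustration of object storage: a flat namespace of key -> object."""

    def __init__(self) -> None:
        self._objects: dict[str, StoredObject] = {}

    def put(self, key: str, data: bytes, metadata: dict[str, str] | None = None) -> None:
        # Writing a key replaces the whole object; there are no partial in-place edits.
        self._objects[key] = StoredObject(key, data, dict(metadata or {}))

    def get(self, key: str) -> StoredObject:
        return self._objects[key]

    def list_keys(self, prefix: str = "") -> list[str]:
        # "Folders" are only a naming convention: a listing filtered by key prefix.
        return sorted(k for k in self._objects if k.startswith(prefix))


store = ToyObjectStore()
store.put("reports/2023/q1.pdf", b"%PDF-1.7", {"owner": "finance"})
store.put("reports/2023/q2.pdf", b"%PDF-1.7")
store.put("images/logo.png", b"\x89PNG")
print(store.list_keys("reports/"))  # ['reports/2023/q1.pdf', 'reports/2023/q2.pdf']
```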

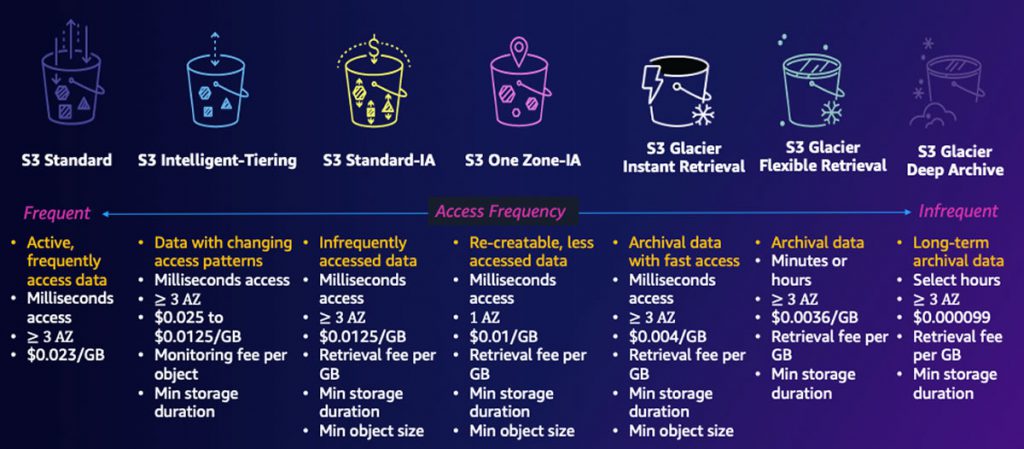

AWS - Amazon S3 (Simple Storage Service)

Amazon S3 is a scalable and durable data storage service that automatically replicates data across AWS Availability Zones. Amazon expresses its durability as 11 nines, meaning an object stored in S3 has a 99.999999999 % probability of surviving a given year. S3 stores files as objects in "buckets" (simplistically, folders) and provides a simple web interface for managing them. S3 can also be accessed from on-premise as network storage through the Storage Gateway service.
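What 11 nines means in practice is easiest to see with arithmetic. AWS's own illustration is that if you store 10,000,000 objects, you can on average expect to lose a single object once every 10,000 years. The sketch below reproduces that figure. It assumes the per-object annual loss probability is 1e-11 and that losses are independent, which is a simplification.

```python
# Assumption: "11 nines" is read as a 1e-11 chance that any one object is lost in a year,
# independently of other objects. Written directly rather than as 1 - 0.99999999999
# to avoid floating-point rounding.
ANNUAL_LOSS_PROBABILITY = 1e-11


def expected_losses_per_year(object_count: int, p: float = ANNUAL_LOSS_PROBABILITY) -> float:
    """Expected number of objects lost per year."""
    return object_count * p


def years_per_lost_object(object_count: int, p: float = ANNUAL_LOSS_PROBABILITY) -> float:
    """Average number of years between two lost objects."""
    return 1.0 / expected_losses_per_year(object_count, p)


print(round(years_per_lost_object(10_000_000)))  # 10000
```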

Azure - Blob storage

In Blob storage, data is stored as objects known as "blobs". The service supports the storage and management of vast amounts of unstructured data, and a single block blob can be up to roughly 190 TiB in size. Blob storage is highly resilient: it can keep multiple redundant copies of data in different locations, so the data survives even a hardware failure at one of them (see the article High availability of services in the cloud - how to do it? for more details). Blob storage offers several access tiers (hot, cool, cold and archive), allowing you to optimise costs by moving less frequently accessed data to a cheaper tier.
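Moving data to cheaper tiers can be automated with a Blob storage lifecycle management policy. The sketch below builds such a policy as a Python dict following the documented JSON schema (`rules`, `definition`, `actions.baseBlob`, `tierToCool`, `tierToArchive`, `daysAfterModificationGreaterThan`). The container name `backups`, the `logs/` prefix and the day thresholds are hypothetical.

```python
from __future__ import annotations

import json


def build_tiering_policy(prefix: str, cool_after: int, archive_after: int,
                         delete_after: int | None = None) -> dict:
    """Build a lifecycle management policy that tiers block blobs by age."""
    if not 0 < cool_after < archive_after:
        raise ValueError("expected 0 < cool_after < archive_after")
    base_blob: dict = {
        "tierToCool": {"daysAfterModificationGreaterThan": cool_after},
        "tierToArchive": {"daysAfterModificationGreaterThan": archive_after},
    }
    if delete_after is not None:
        if delete_after <= archive_after:
            raise ValueError("delete_after must be later than archive_after")
        base_blob["delete"] = {"daysAfterModificationGreaterThan": delete_after}
    return {
        "rules": [
            {
                "enabled": True,
                "name": "tier-by-age",
                "type": "Lifecycle",
                "definition": {
                    "actions": {"baseBlob": base_blob},
                    # prefixMatch values start with the container name
                    "filters": {"blobTypes": ["blockBlob"], "prefixMatch": [prefix]},
                },
            }
        ]
    }


policy = build_tiering_policy("backups/logs/", cool_after=30, archive_after=180)
print(json.dumps(policy, indent=2))
```

If you save the printed JSON to a file, you can apply it with `az storage account management-policy create --account-name <account> --resource-group <group> --policy @policy.json`.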

Storage for long-term data archiving

AWS - Amazon S3 Glacier

S3 Glacier is a family of S3 storage classes optimised for long-term data retention and archiving at low cost. Objects in the S3 Glacier Flexible Retrieval and S3 Glacier Deep Archive classes must be restored before they can be read. S3 Glacier offers several retrieval options that differ in speed and price, so you can choose according to your requirements and budget.
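Objects are usually moved into Glacier classes by an S3 lifecycle rule rather than by hand. The sketch below builds a `LifecycleConfiguration` for boto3's `put_bucket_lifecycle_configuration`. In the API, `GLACIER` is the name of S3 Glacier Flexible Retrieval and `DEEP_ARCHIVE` is S3 Glacier Deep Archive. The bucket name, prefix, rule ID and day counts are hypothetical.

```python
from __future__ import annotations


def build_archive_lifecycle(prefix: str, glacier_after_days: int,
                            deep_archive_after_days: int | None = None,
                            rule_id: str = "archive-old-data") -> dict:
    """Build an S3 LifecycleConfiguration that moves objects under `prefix` to Glacier classes."""
    # "GLACIER" is the API name of S3 Glacier Flexible Retrieval.
    transitions = [{"Days": glacier_after_days, "StorageClass": "GLACIER"}]
    if deep_archive_after_days is not None:
        if deep_archive_after_days <= glacier_after_days:
            raise ValueError("Deep Archive transition must come after the Glacier transition")
        transitions.append({"Days": deep_archive_after_days, "StorageClass": "DEEP_ARCHIVE"})
    return {
        "Rules": [
            {
                "ID": rule_id,
                "Filter": {"Prefix": prefix},
                "Status": "Enabled",
                "Transitions": transitions,
            }
        ]
    }


config = build_archive_lifecycle("projects/closed/", 90, deep_archive_after_days=365)
# To apply (requires boto3 and AWS credentials):
#   boto3.client("s3").put_bucket_lifecycle_configuration(
#       Bucket="example-bucket", LifecycleConfiguration=config)
```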

Azure - Blob storage archive

As with S3 Glacier, Blob storage offers a storage class suited to long-term data storage and archiving. In this case, it is the "archive" tier, which is designed for data kept for 180 days or more. Data in the archive tier is offline and must be rehydrated to an online tier before it can be read.
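The 180-day minimum has a practical consequence. Deleting a blob, or moving it out of the archive tier, before 180 days incurs an early deletion charge. According to Azure's documentation, the charge equals the remaining days of archive storage: a blob deleted after 45 days is charged for another 135 days. The small helper below only computes those remaining days. It does not model prices.

```python
ARCHIVE_MINIMUM_DAYS = 180  # minimum storage period of the Azure archive tier


def early_deletion_days(days_in_archive: int, minimum_days: int = ARCHIVE_MINIMUM_DAYS) -> int:
    """Days of archive storage still charged if a blob leaves the tier early."""
    if days_in_archive < 0:
        raise ValueError("days_in_archive cannot be negative")
    return max(0, minimum_days - days_in_archive)


print(early_deletion_days(45))   # 135
print(early_deletion_days(200))  # 0
```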

File systems or fileshare

AWS - Amazon FSx

Amazon FSx is a service that provides fully managed, high-performance file systems for a variety of uses. It supports file systems such as Windows File Server and Lustre, integrates with other AWS services, and can be connected both to individual user workstations and to on-premise environments.

AWS - Amazon EFS

Amazon EFS (Elastic File System) is a managed file system providing shared file storage for multiple instances and containers simultaneously. EFS is easily scalable and highly available. It is suitable for applications that require shared access to data.
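Shared access in practice means that every instance mounts the same file system over NFS. The sketch below builds the mount command using the EFS DNS name format `<file-system-id>.efs.<region>.amazonaws.com` and the NFS options AWS recommends for EFS. The file system ID, region and mount point are hypothetical. The code only builds a string and does not mount anything.

```python
import re

# EFS file system IDs look like "fs-" followed by hexadecimal characters.
_FS_ID = re.compile(r"fs-[0-9a-f]+")

# NFS mount options recommended by AWS for EFS.
NFS_OPTIONS = "nfsvers=4.1,rsize=1048576,wsize=1048576,hard,timeo=600,retrans=2,noresvport"


def efs_dns_name(file_system_id: str, region: str) -> str:
    """Build the regional DNS name of an EFS file system."""
    if not _FS_ID.fullmatch(file_system_id):
        raise ValueError(f"unexpected file system ID: {file_system_id!r}")
    return f"{file_system_id}.efs.{region}.amazonaws.com"


def efs_mount_command(file_system_id: str, region: str, mount_point: str) -> str:
    """Build the NFS mount command each instance would run to share the file system."""
    return f"sudo mount -t nfs4 -o {NFS_OPTIONS} {efs_dns_name(file_system_id, region)}:/ {mount_point}"


print(efs_mount_command("fs-12345678", "eu-central-1", "/mnt/shared"))
```

Running the same command on several instances gives them all the same directory tree. Alternatively, the `amazon-efs-utils` package provides an `efs` mount type that applies these settings and can add TLS.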

Azure - Files

Azure Files is a fully managed service that lets users store and access files over the SMB and NFS protocols. It supports snapshots for point-in-time recovery, access control through Active Directory or Azure Active Directory, and integration with Azure Backup for data protection. It eliminates the need to manage file servers and provides a flexible, reliable way to store and access files in the cloud.

Azure - NetApp Files

Azure NetApp Files is built on NetApp technology. It is well suited to file sharing when the application needs high performance (for example, high-performance computing, HPC), reliability and low latency. Azure NetApp Files is often used when hosting SAP HANA in the cloud.

Virtual server disks

AWS - Amazon EBS

Amazon EBS (Elastic Block Store) is a service that provides block storage for virtual servers in the cloud. EBS lets you create data volumes and attach them to virtual servers. It offers high availability, durability and backup capabilities through snapshots. EBS is well suited to demanding applications that need fast, reliable block storage.

Azure - Managed disks

Azure Managed Disks simplifies the management of virtual machine disks. It lets you create, manage and scale disks without having to manage the underlying storage infrastructure yourself. Several disk types are available (Ultra Disk, Premium SSD v2, Premium SSD, Standard SSD and Standard HDD), each designed for different performance and cost requirements. Key benefits include automatic replication for high availability, data backup and integration with Azure virtual machines.

Hybrid storage

AWS - Storage Gateway

AWS Storage Gateway is a service that allows you to integrate on-premise storage with the AWS cloud. It provides tools to create a connection between your existing storage device and AWS storage. It distinguishes between three types of gateways: File Gateway (allows access to file data in the cloud as network storage), Volume Gateway (provides block storage that can be used as a virtual disk for your applications) and Tape Gateway (allows data archiving to virtual tapes).

Azure - File Sync

Azure File Sync synchronizes on-premise file storage (fileshare) with Azure Files in the cloud. It offers a comprehensive and centralized way to manage and access files in multiple locations and provides a hybrid cloud solution for file sharing.

Moving large amounts of data from on-premise to the cloud in bulk

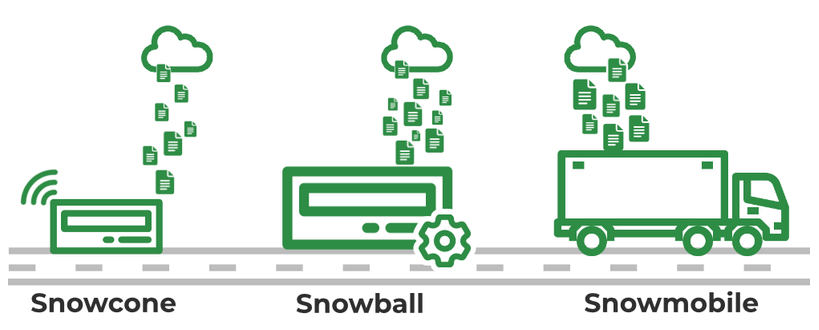

AWS - Snowcone, Snowball, Snowmobile

AWS Snowcone is a physical device used to move large amounts of data to the AWS cloud. The device is delivered to the business and connected to the local network, which makes moving data easy and fast. Once filled, the Snowcone is returned to AWS, where the data is automatically imported into the cloud. Snowcone has a capacity of 8 TB. The larger alternative is Snowball, with a capacity of 80 TB. For the most demanding cases there is Snowmobile, a truck carrying data storage that can hold up to 100 PB of data.

Azure - Data Box

Azure Data Box is likewise a physical device used to move data to the cloud, in this case to Azure. The device is delivered to the company, which copies its data onto it and sends it back to Azure, where the data is uploaded to the cloud. The Data Box Disk option has a capacity of 40 TB, Data Box 100 TB and Data Box Heavy up to 1 PB.

There is also Data Box Gateway, a virtual appliance that can be used to move data from on-premise to Azure.
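Whether a physical transfer device is worthwhile comes down to simple arithmetic: how long the same data would take over the network, and how many devices it would fill. The sketch below uses decimal units and an assumed link utilisation (80% by default). It uses the nominal device capacities quoted above; usable capacity is typically somewhat lower.

```python
import math

SECONDS_PER_DAY = 86_400


def network_transfer_days(data_tb: float, link_mbps: float, utilisation: float = 0.8) -> float:
    """Days needed to upload `data_tb` decimal terabytes over a `link_mbps` link."""
    if not 0 < utilisation <= 1:
        raise ValueError("utilisation must be in (0, 1]")
    bits = data_tb * 1e12 * 8
    seconds = bits / (link_mbps * 1e6 * utilisation)
    return seconds / SECONDS_PER_DAY


def devices_needed(data_tb: float, device_capacity_tb: float) -> int:
    """Number of transfer devices of the given capacity needed to hold the data."""
    return math.ceil(data_tb / device_capacity_tb)


print(f"{network_transfer_days(80, 100, utilisation=1.0):.1f} days")  # 74.1 days
print(devices_needed(200, 80))  # 3
```

Even at full line rate, 80 TB over a 100 Mbit/s connection takes about 74 days. That is why shipping a device that holds the same amount of data is often the faster option.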

Already know how to store data in the cloud?

Storing data in the cloud allows businesses to manage and use their data more efficiently, increase its availability and security, and reduce IT infrastructure costs. Each of the cloud storage services described in this article suits a different use case, so it is important to consider your specific data storage requirements and choose the right service accordingly.

If you are hesitating, we will be happy to help you with your choice.